4 Trees

This chapter presents some of the tree methods used for classification or regression problems. After presenting some data that will be used to illustrate the methods, it shows how decision trees work. Then, it present two ensemble methods based on decision trees: bagging and random forests. It is built using two main references: James et al. (2021), Boehmke and Greenwell (2019).

4.1 Data Used in the Notebook

To illustrate how to use tree-based methods, we will rely on Seoul bike sharing demand data set (Sathishkumar, Jangwoo, and Yongyun 2020 ; Sathishkumar and Yongyun 2020) freely available on the UCI Machine Learning Repository.

The data give the number of bicycles rented each hour from December 1st, 2017 to November 30th, 2018. It contains 8,760 observations. Some characteristics are made available on each day:

date: the date (day/month/year)Rented Bike Count: number of bicycles rentedHour: hourTemperature(°C): temperature in Celcius degreesHumidity(%): percentage of humidityWind speed (m/s): wind speed in metres per secondVisibility (10m): visibility at 10 metreDew point temperature(°C): dew point temperature in Celcius degrees, i.e., temperature to which air must be cooled to become saturated with water vapourSolar Radiation (MJ/m2): solar radiation in megajoules per square metreRainfall(mm): rainfall in millimetresSnowfall (cm): snowfall in centimetresSeasons: season (Spring, Summer, Automn, Winter)Holiday: holiday (Holiday, No Holiday)Functioning Day: functioning day of the bicycle rental service (Yes, No)

We will need to use many functions from the packages of the tidyverse environment.

library(tidyverse)A copy of the dataset is available on my website. The CSV file can directly be loaded in R as follows:

url <- "https://egallic.fr/Enseignement/ML/ECB/data/SeoulBikeData.csv"

bike <- read_csv(url, locale = locale(encoding = "latin1"))There are a few days during which the rental service is not functioning:

table(bike$`Functioning Day`)##

## No Yes

## 295 8465For simplicity, let us remove the few observations for which the service is not functioning.

bike <-

bike %>%

filter(`Functioning Day` == "Yes") %>%

select(-`Functioning Day`)The name of the variables is not convenient at all to work with. Let us rename the variables:

bike <-

bike %>%

rename(

"date" = `Date`,

"rented_bike_count" = `Rented Bike Count`,

"hour" = `Hour`,

"temperature" = `Temperature(°C)`,

"humidity" = `Humidity(%)`,

"wind_speed" = `Wind speed (m/s)`,

"visibility" = `Visibility (10m)`,

"dew_point_temperature" = `Dew point temperature(°C)`,

"solar_radiation" = `Solar Radiation (MJ/m2)`,

"rainfall" = `Rainfall(mm)`,

"snowfall" = `Snowfall (cm)`,

"seasons" = `Seasons`,

"holiday" = `Holiday`

)There may be some seasonality in the data depending on: the hour, the day of the week, or the month of the year. While the hour is already given in the hour variable, the other component are not. Let us transform the date column in a date format. Then, the month and the day of the week can easily be extracted using functions from {lubidate}. We will provide the month name and the name of the week day in English. Depending on our settings, the functions month() and wday() from {lubridate} may give different outputs. We will thus make sure to set the time locale to English. The name of the locale is system dependent: on Unix, we can use "en_US", while on Windows, we can use "english_us".

loc_time_english <-

ifelse(.Platform$OS.type == "unix", "en_US", "english_us")library(lubridate)The new variables can be created as follows:

bike <-

bike %>%

mutate(

date = dmy(date),

year = year(date),

month = month(date, label = TRUE, locale = loc_time_english),

month = factor(as.character(month),

levels = c("Jan", "Feb", "Mar", "Apr",

"May", "Jun", "Jul", "Aug",

"Sep", "Oct", "Nov", "Dec")),

week_day = wday(date, label = TRUE, locale = loc_time_english),

week_day = factor(as.character(week_day),

levels = c("Mon", "Tue", "Wed",

"Thu", "Fri", "Sat", "Sun")),

seasons = factor(seasons, levels = c("Spring", "Summer",

"Autumn", "Winter"))

)Now, let us have a look at some summary statistics. First, we shall consider our target variable, i.e., the hourly number of bikes rented:

summary(bike$rented_bike_count)## Min. 1st Qu. Median Mean 3rd Qu. Max.

## 2.0 214.0 542.0 729.2 1084.0 3556.0On average, there are 729 bikes rented per hour in Seoul over the considered period (December 2017 to November 2018).

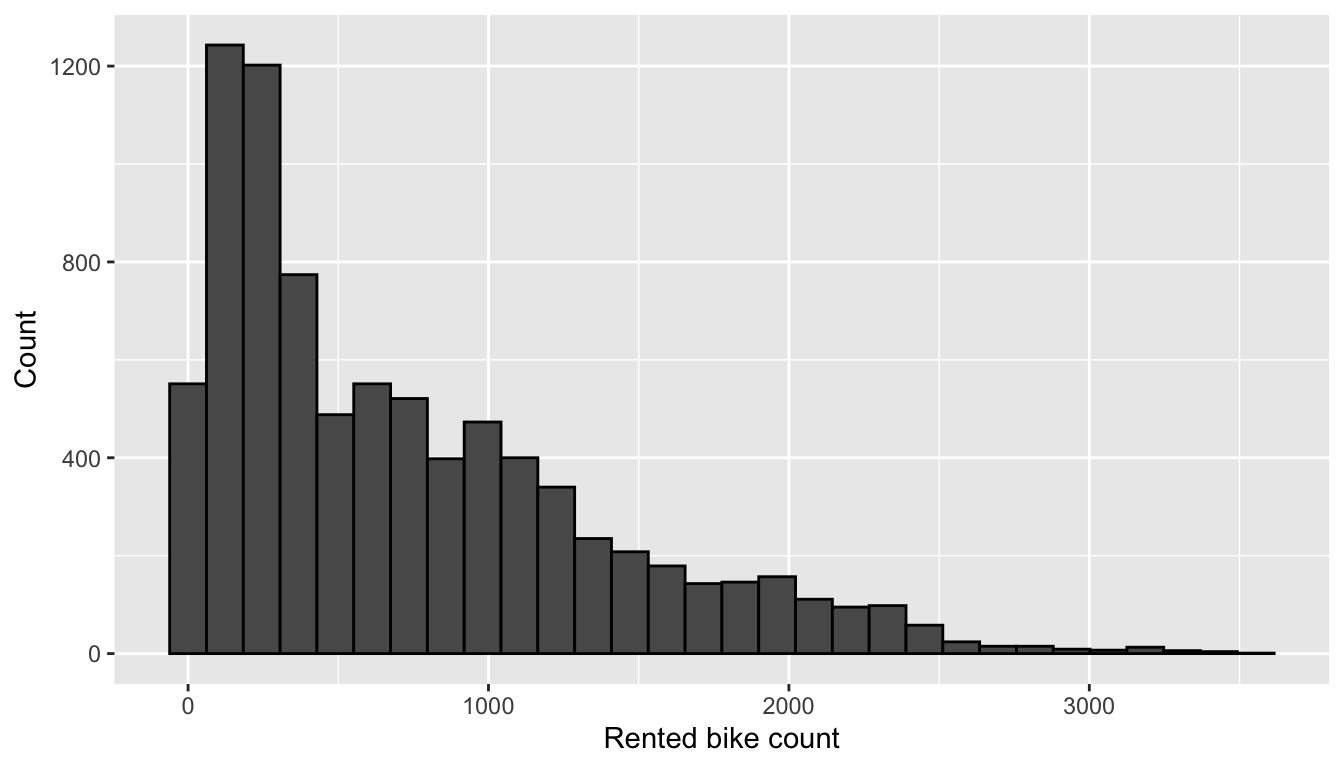

We can have an idea of the distribution by plotting a histogram:

ggplot(data = bike, mapping = aes(x = rented_bike_count)) +

geom_histogram(colour = "black") +

labs(x = "Rented bike count", y = "Count")

Figure 4.1: Distribution of rented bike count.

The distribution is skewed. Let us look at the distribution of the target variable depending on the month:

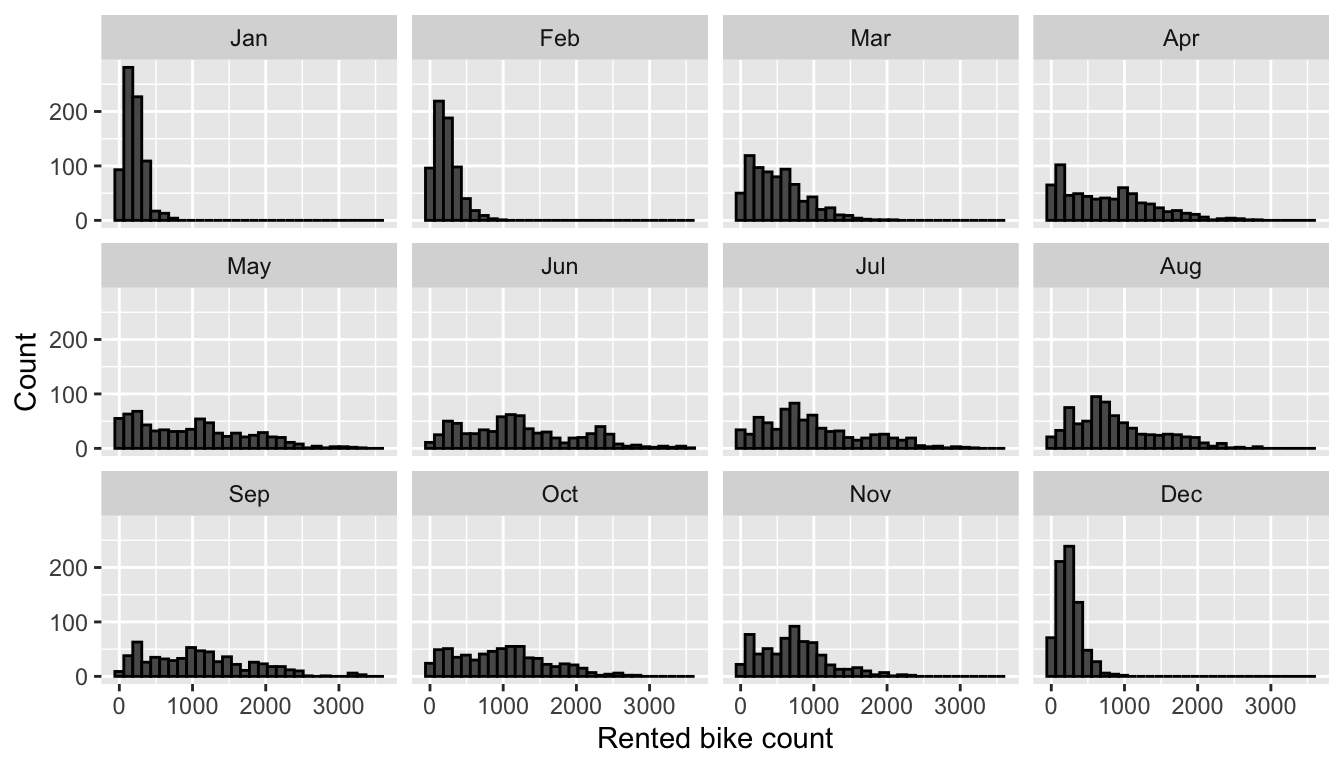

ggplot(data = bike, mapping = aes(x = rented_bike_count)) +

geom_histogram(colour = "black") +

labs(x = "Rented bike count", y = "Count") +

facet_wrap(~month)

Figure 4.2: Distribution of rented bike count by month.

In cold months (December, January, February), the distribution appears to be concentrated around low values. There seems to be monthly seasonality here.

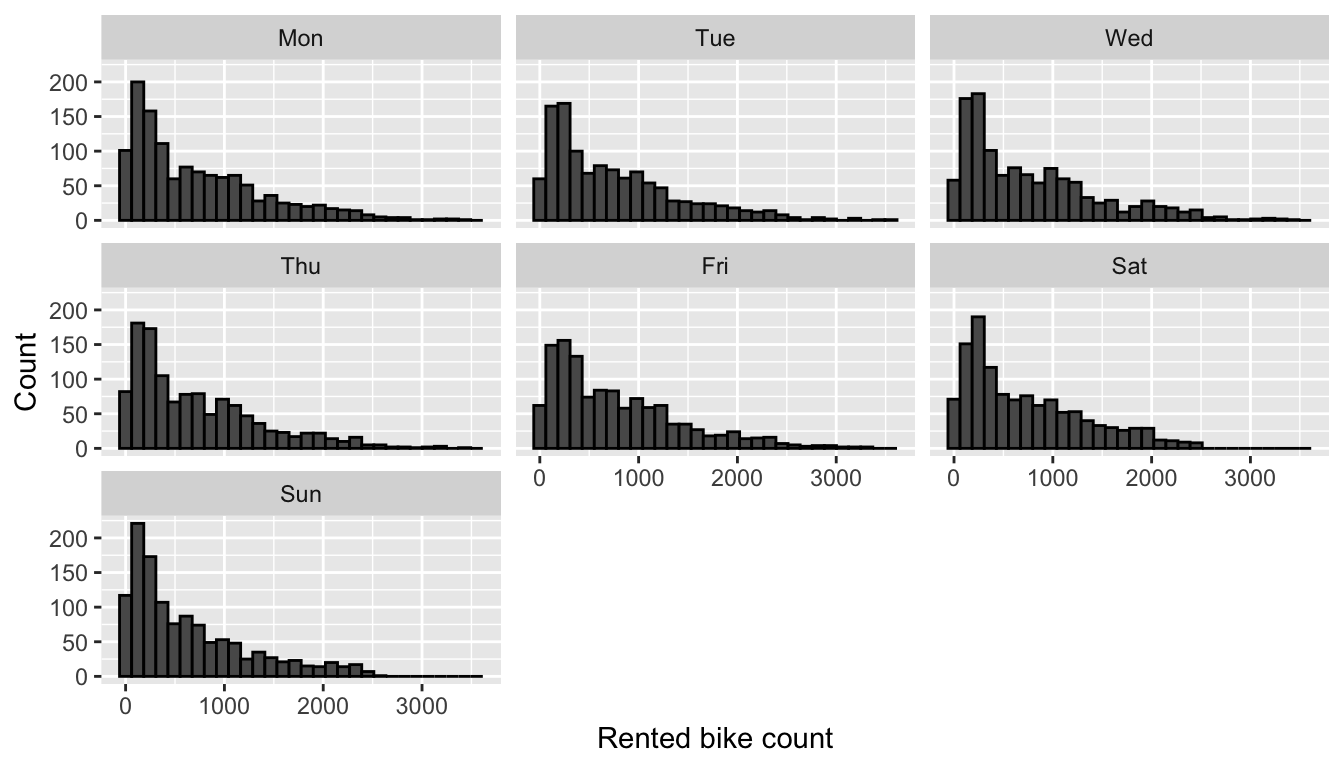

Let us look at the distribution of the number of bikes rented depending on the weekday:

ggplot(data = bike, mapping = aes(x = rented_bike_count)) +

geom_histogram(colour = "black") +

labs(x = "Rented bike count", y = "Count") +

facet_wrap(~week_day)

Figure 4.3: Distribution of rented bike count by weekday.

To the naked eye, there does not seem to be a specific link between the day of the week and the number of bicycles rented.

Somme summary statistics depending on the weekday can be obtained using the tableby() function from {arsenal}.

library(arsenal)

tableby_control <- tableby.control(

numeric.stats=c("Nmiss", "meansd", "median", "q1q3")

)

tab <- tableby(week_day~rented_bike_count, data = bike,

control = tableby_control)

summary(tab, text = NULL) %>%

kableExtra::kable(

caption = "Rented bike count depending on the week days.", booktabs = T,

format = "latex", longtable = TRUE) %>%

kableExtra::kable_classic(html_font = "Cambria") %>%

kableExtra::kable_styling(

bootstrap_options = c("striped", "hover", "condensed",

"responsive", "scale_down"),

font_size = 5)The ANOVA test result reported in the previous table lead us to think that the mean is not the same across all weekdays. We notice that the number of rented bikes is relatively lower on Sundays and relatively higher on Fridays.

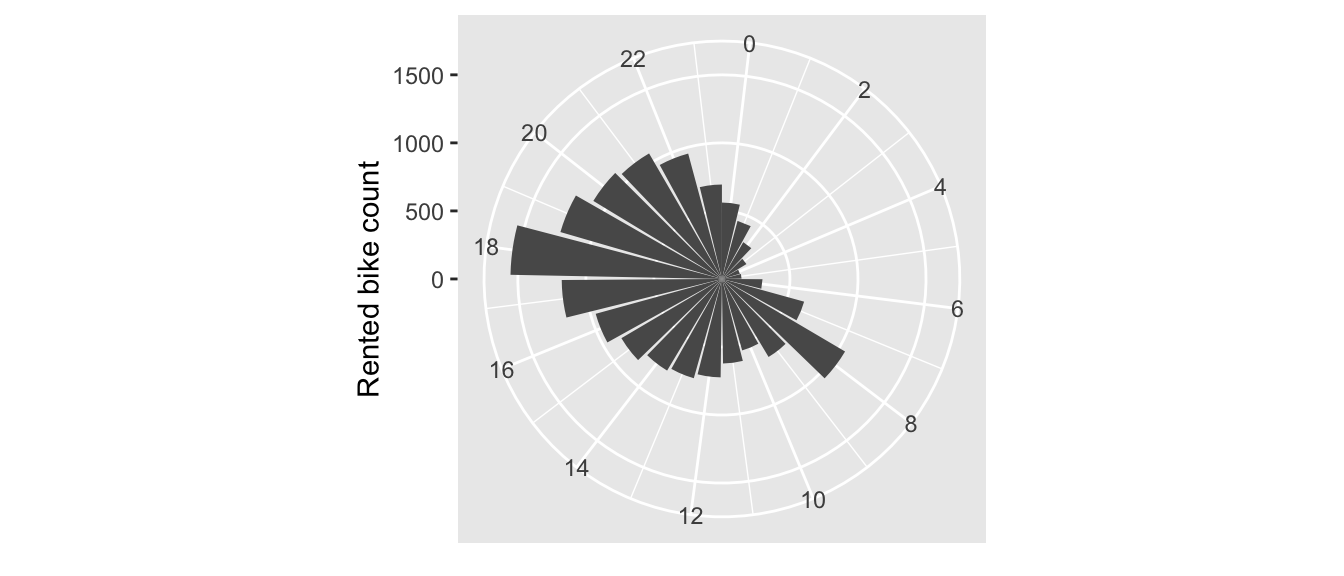

To have an idea of the distribution of the target variable conditional on the hour, we can use polar coordinates:

bike %>%

group_by(hour) %>%

summarise(rented_bike_count = mean(rented_bike_count)) %>%

ggplot(data = ., aes(x = hour, y = rented_bike_count)) +

geom_bar(stat = "identity") +

coord_polar() +

labs(x = NULL, y = "Rented bike count") +

scale_x_continuous(breaks = seq(0,24, by = 2))

Figure 4.4: Rented bike count per hour.

As one could expect, the number of bikes rented during the night are much lower that during the day. Two peaks are observed: around 8am and around 6pm, i.e., at the beginning and end of the working day.

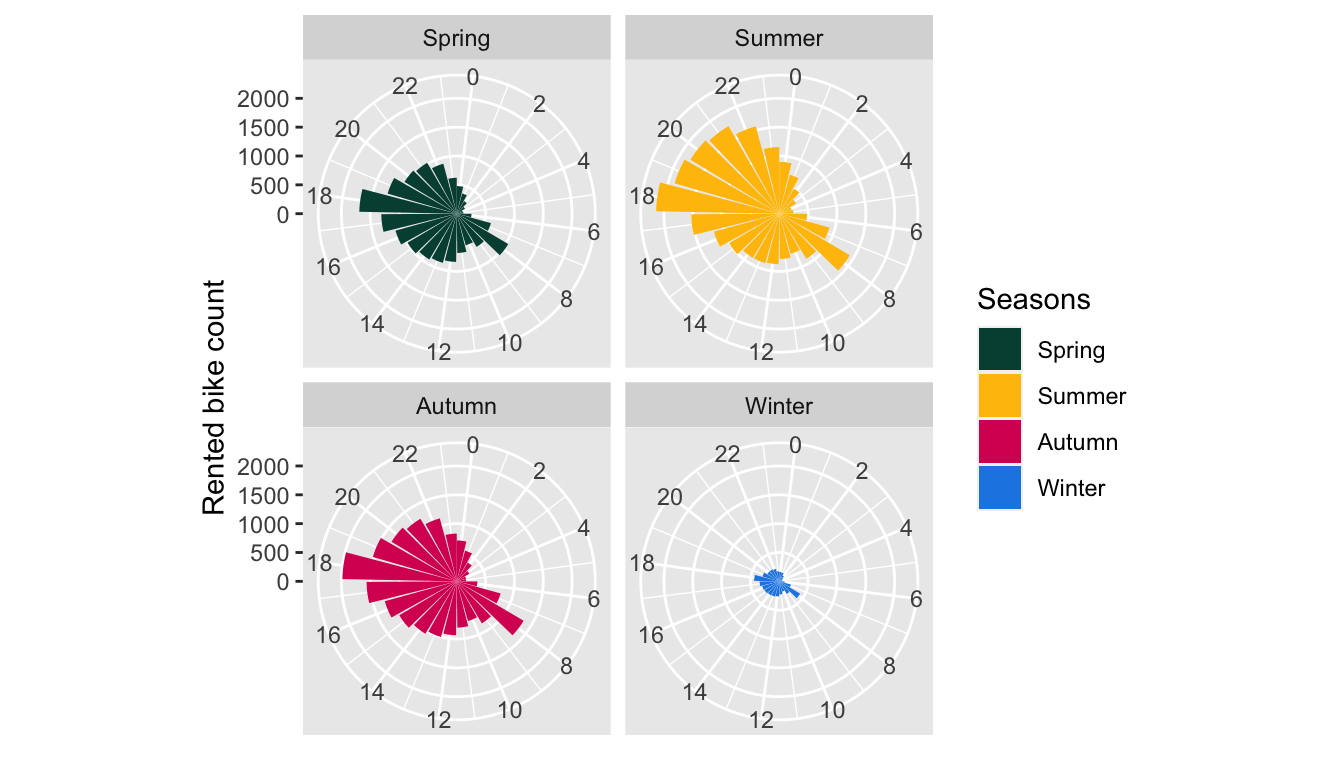

We can also look at the distribution of the number of rented bikes depending on the seasons:

bike %>%

group_by(hour, seasons) %>%

summarise(rented_bike_count = mean(rented_bike_count)) %>%

ggplot(data = ., aes(x = hour, y = rented_bike_count)) +

geom_bar(stat = "identity", mapping = aes(fill = seasons)) +

coord_polar() +

labs(x = NULL, y = "Rented bike count") +

scale_x_continuous(breaks = seq(0,24, by = 2)) +

facet_wrap(~seasons) +

scale_fill_manual("Seasons",

values = c("Spring" = "#004D40", "Summer" = "#FFC107",

"Autumn" = "#D81B60", "Winter" = "#1E88E5"))

Figure 4.5: Rented bike count per hour and per season.

As previously seen, the number of rented bikes is much lower during Winter. The peaks at 8am and 6pm are observed regardless the seasons.

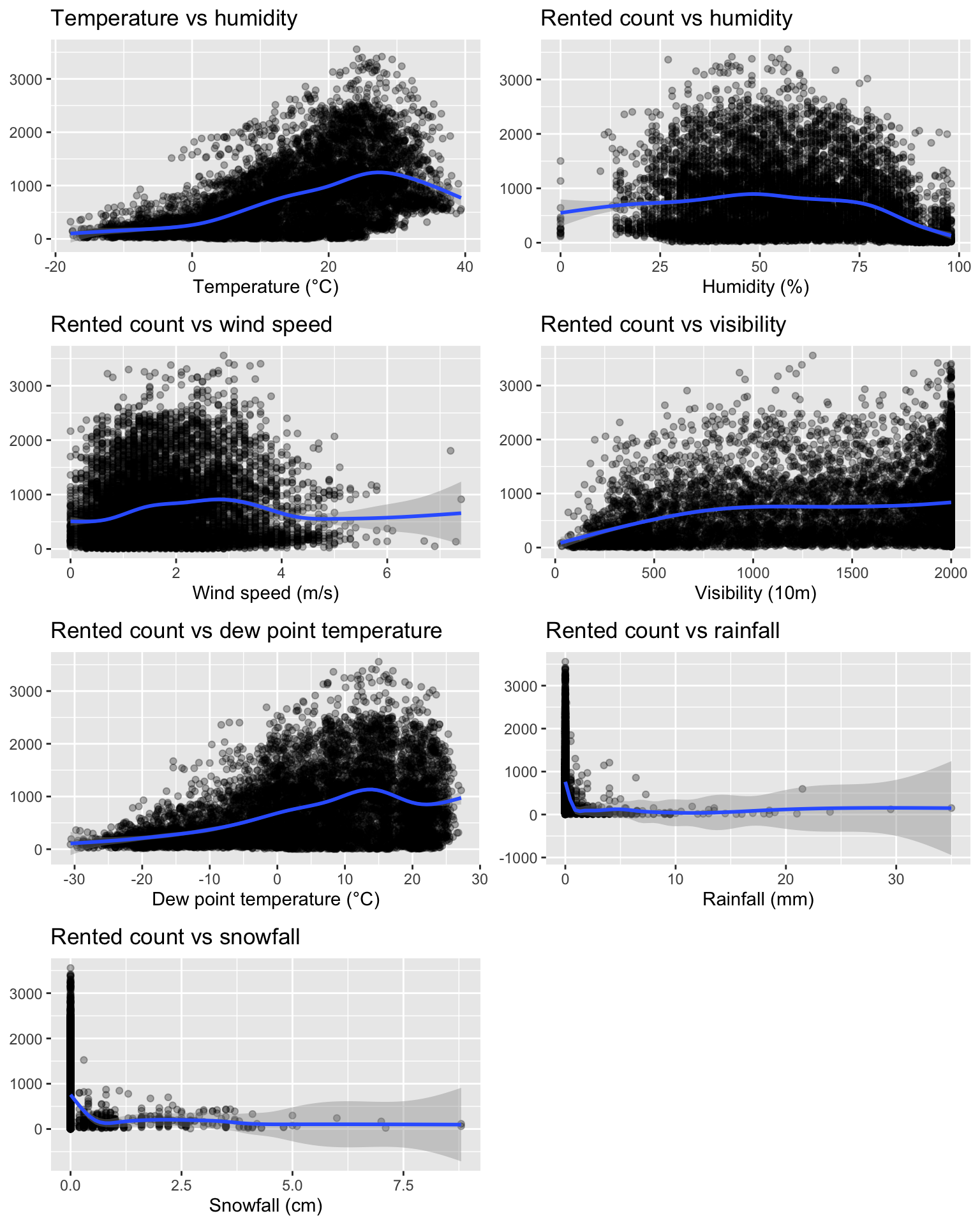

Now, using scatter plots, let us explore the relationship between the number of bikes rented and each weather variable. Let us first create each plot and store everyone of them in a different object.

library(cowplot)

p_temp <-

ggplot(data = bike,

mapping = aes(x = temperature, y = rented_bike_count)) +

geom_point(alpha = .3) +

geom_smooth() +

labs(x = "Temperature (°C)", y = NULL,

title = "Temperature vs humidity")

p_humidity <-

ggplot(data = bike,

mapping = aes(x = humidity, y = rented_bike_count)) +

geom_point(alpha = .3) +

geom_smooth() +

labs(x = "Humidity (%)", y = NULL,

title = "Rented count vs humidity")

p_wind <-

ggplot(data = bike,

mapping = aes(x = wind_speed, y = rented_bike_count)) +

geom_point(alpha = .3) +

geom_smooth() +

labs(x = "Wind speed (m/s)", y = NULL,

title = "Rented count vs wind speed")

p_visibility <-

ggplot(data = bike,

mapping = aes(x = visibility, y = rented_bike_count)) +

geom_point(alpha = .3) +

geom_smooth() +

labs(x = "Visibility (10m)", y = NULL,

title = "Rented count vs visibility")

p_dew_point <-

ggplot(data = bike,

mapping = aes(x = dew_point_temperature, y = rented_bike_count)) +

geom_point(alpha = .3) +

geom_smooth() +

labs(x = "Dew point temperature (°C)", y = NULL,

title = "Rented count vs dew point temperature")

p_solar_radiation <-

ggplot(data = bike,

mapping = aes(x = solar_radiation, y = rented_bike_count)) +

geom_point(alpha = .3) +

geom_smooth() +

labs(x = "Solar Radiation (MJ/m2)", y = NULL,

title = "Rented count vs solar radiation")

p_rainfall <-

ggplot(data = bike,

mapping = aes(x = rainfall, y = rented_bike_count)) +

geom_point(alpha = .3) +

geom_smooth() +

labs(x = "Rainfall (mm)", y = NULL,

title = "Rented count vs rainfall")

p_snowfall <-

ggplot(data = bike,

mapping = aes(x = snowfall, y = rented_bike_count)) +

geom_point(alpha = .3) +

geom_smooth() +

labs(x = "Snowfall (cm)", y = NULL,

title = "Rented count vs snowfall")Then, using the plot_grid() function from {cowplot}, we can plot all these graphs on a single figure:

cowplot::plot_grid(p_temp, p_humidity, p_wind,

p_visibility, p_dew_point, p_rainfall,

p_snowfall, ncol = 2)

Figure 4.6: Relationship between the rented count and the numeric predictors.

The plot show nonlinear relationships between the weather and the number of rented bikes. One should note that these graphs do not allow to look at interaction effects.

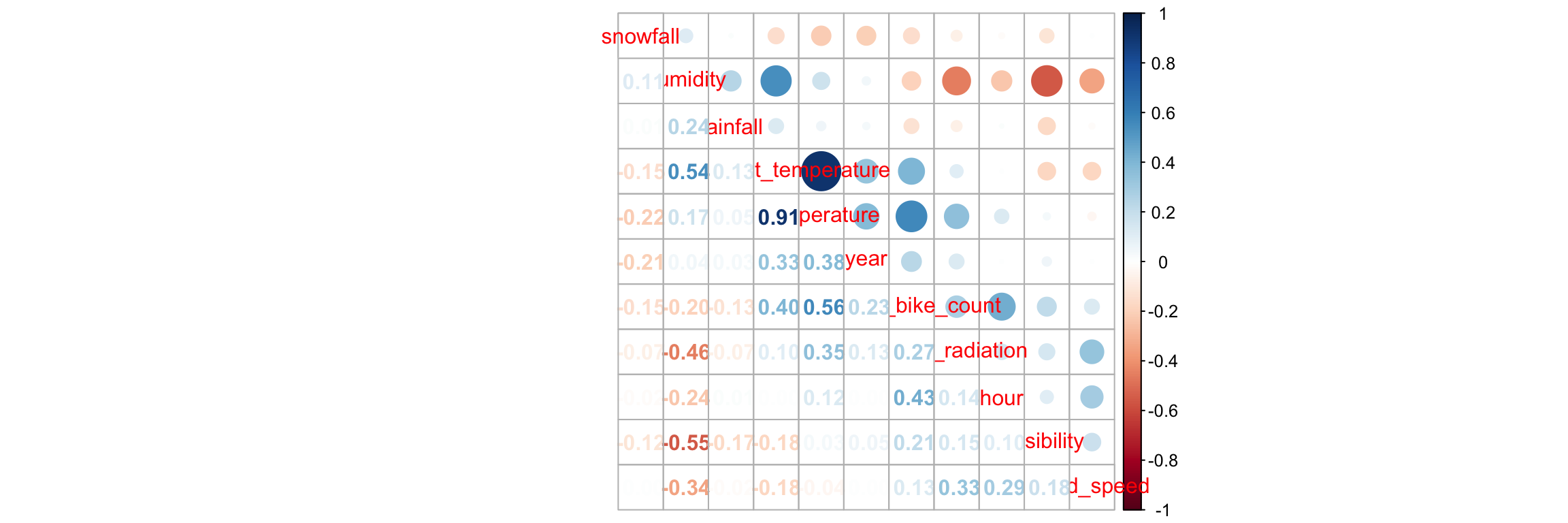

Is there any correlation between the numerical variables of the dataset? Let us have a look.

correl_mat <-

bike %>%

select_if(is.numeric) %>%

cor() %>%

round(2)

correl_mat## rented_bike_count hour temperature humidity wind_speed

## rented_bike_count 1.00 0.43 0.56 -0.20 0.13

## hour 0.43 1.00 0.12 -0.24 0.29

## temperature 0.56 0.12 1.00 0.17 -0.04

## humidity -0.20 -0.24 0.17 1.00 -0.34

## wind_speed 0.13 0.29 -0.04 -0.34 1.00

## visibility 0.21 0.10 0.03 -0.55 0.18

## dew_point_temperature 0.40 0.00 0.91 0.54 -0.18

## visibility dew_point_temperature solar_radiation rainfall

## rented_bike_count 0.21 0.40 0.27 -0.13

## hour 0.10 0.00 0.14 0.01

## temperature 0.03 0.91 0.35 0.05

## humidity -0.55 0.54 -0.46 0.24

## wind_speed 0.18 -0.18 0.33 -0.02

## visibility 1.00 -0.18 0.15 -0.17

## dew_point_temperature -0.18 1.00 0.10 0.13

## snowfall year

## rented_bike_count -0.15 0.23

## hour -0.02 0.00

## temperature -0.22 0.38

## humidity 0.11 0.04

## wind_speed 0.00 0.00

## visibility -0.12 0.05

## dew_point_temperature -0.15 0.33

## [ getOption("max.print") est atteint -- 4 lignes omises ]It may be more convenient to construct a correlation plot (we can do so here as the number of predictors is not too large).

library(corrplot)

corrplot.mixed(correl_mat, order = 'AOE')

Figure 4.7: Correlation plot.

A nice summary table can also be constructed.

tableby_control <- tableby.control(

numeric.stats=c("Nmiss", "meansd", "range", "median", "q1q3")

)

tab <- tableby(~., data = bike, control = tableby_control)

summary(tab, text = NULL) %>%

kableExtra::kable(caption = "Summary statistics.", booktabs = T,

format = "latex", longtable = TRUE) %>%

kableExtra::kable_classic(full_width = F, html_font = "Cambria") %>%

kableExtra::kable_styling(

bootstrap_options = c("striped", "hover", "condensed", "responsive"),

font_size = 6)For the sake of the illustration, let us create a binary outcome variable. Let us imagine that, for example, the service is too costly if the number of bikes rented per day is below 300. In such a case, we might want to train a classifier which will predict whether or not the number of bikes rented per day will be below or above that threshold.

bike <-

bike %>%

mutate(y_binary = ifelse(rented_bike_count < 300, "Low", "High"))Let us look at the number and proportions of observations in each category:

table(bike$y_binary)##

## High Low

## 5526 2939prop.table(table(bike$y_binary))##

## High Low

## 0.6528057 0.3471943We can also recreate the summary table depending on the binary outcome variable:

tableby_control <- tableby.control(

numeric.stats=c("Nmiss", "meansd", "range", "median", "q1q3")

)

tab <- tableby(y_binary~., data = bike, control = tableby_control)

summary(tab, text = NULL) %>%

kableExtra::kable(

caption = "Summary statistics depending on the binary response variable.",

booktabs = T,

format = "latex", longtable = TRUE) %>%

kableExtra::kable_classic(full_width = F, html_font = "Cambria") %>%

kableExtra::kable_styling(

bootstrap_options = c("striped", "hover", "condensed", "responsive"),

font_size = 6)Now that we are a bit more familiar with the data, let us dig into the core subject of this notebook.

4.2 Training and Test Sets

Let us create a training dataset and a test dataset. We will put 80% of the first observations in the training set and the remaining 20% in the test set. Although we will not explore the time series aspect of the data at first, let us prepare the training and test sets such that the data in the test set will be those at the end of the sample period.

First, we need to make sure that the table is sorted by ascending values of date and then by ascending values of hour:

bike <-

bike %>%

arrange(date, hour)Then the two sets can be created:

n_train <- round(.8*nrow(bike))

# Training set

df_train <-

bike %>%

slice(1:n_train)

# Test set

df_test <-

bike %>%

slice(-(1:n_train))Let us look at the dimensions:

dim(df_train)## [1] 6772 17dim(df_test)## [1] 1693 174.3 Decision Trees

In this first part, we will try to predict the number of rented bikes with a regression tree and then we will try to predict the binary outcome variable using a classification tree. The method we will used is called Classification and Regression Trees (CART) and was introduced in L. Breiman et al. (1984).

We will rely on {rpart} to build the tree and on {rpart.plot} to create graphical illustrations (whenever it is possible to do so).

library(rpart)

library(rpart.plot)4.3.1 Regression Trees

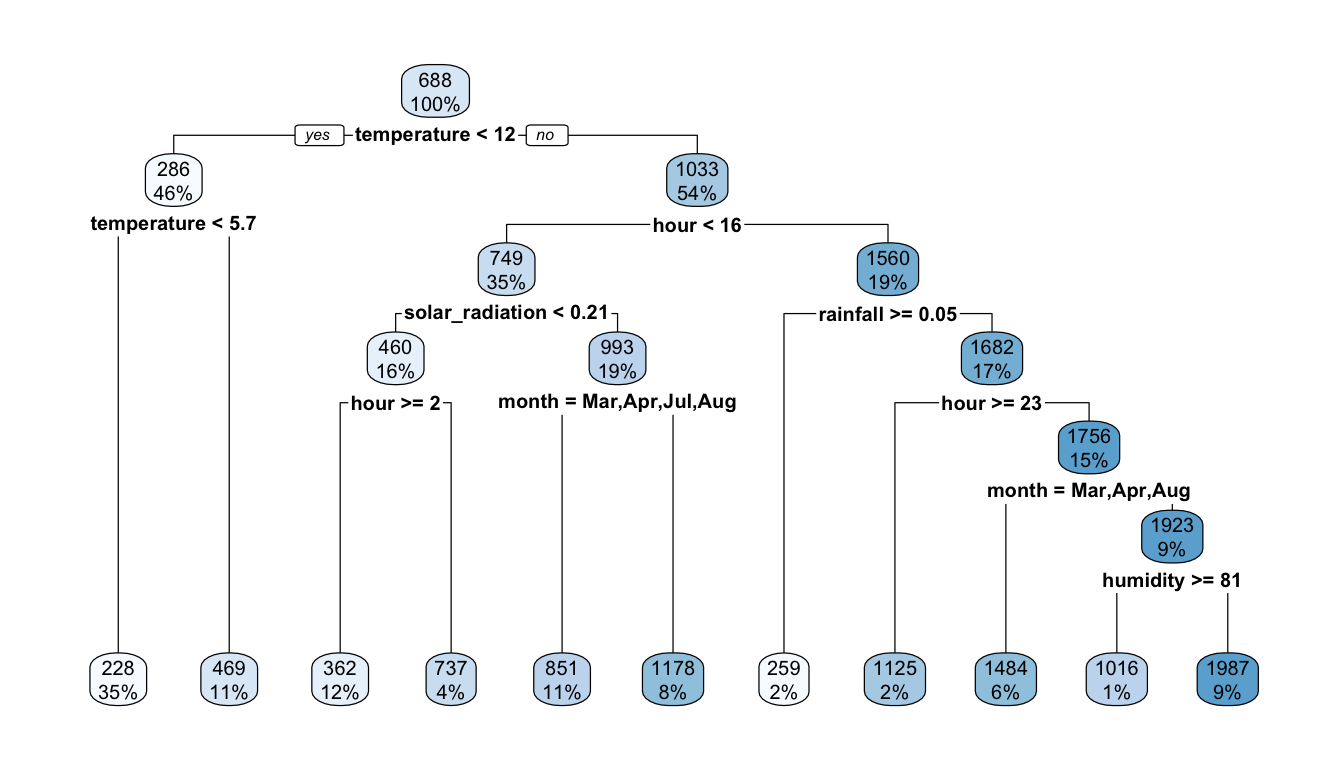

To predict the number of hourly rented bikes, the predictor space will be segmented into a number of simple regions. To create these segments, the algorithm creates a series of binary splits. The data are recursively split into terminal nodes (leaves). Let us illustrate this with our data.

part_tree <-

rpart(rented_bike_count ~.,

data = df_train %>% select(-y_binary, -date),

method = "anova")The rules that were learnt by the algorithm are the following

part_tree## n= 6772

##

## node), split, n, deviance, yval

## * denotes terminal node

##

## 1) root 6772 2825639000 687.5492

## 2) temperature< 12.15 3131 199783800 285.6675

## 4) temperature< 5.65 2383 64996140 228.1637 *

## 5) temperature>=5.65 748 101804000 468.8650 *

## 3) temperature>=12.15 3641 1685319000 1033.1390

## 6) hour< 15.5 2365 518621400 748.7455

## 12) solar_radiation< 0.205 1084 114108200 460.0489

## 24) hour>=1.5 801 61201670 362.2322 *

## 25) hour< 1.5 283 23550280 736.9081 *

## 13) solar_radiation>=0.205 1281 237713700 993.0445

## 26) month=Mar,Apr,Jul,Aug 723 92311480 850.5007 *

## 27) month=May,Jun,Sep 558 111677400 1177.7380 *

## 7) hour>=15.5 1276 620889500 1560.2470

## 14) rainfall>=0.05 109 19285900 259.2018 *

## 15) rainfall< 0.05 1167 399864100 1681.7670

## 30) hour>=22.5 137 11867380 1124.7230 *

## 31) hour< 22.5 1030 339831500 1755.8590

## 62) month=Mar,Apr,Aug 392 86211860 1483.6530 *

## 63) month=May,Jun,Jul,Sep 638 206727700 1923.1080

## 126) humidity>=80.5 42 10854010 1015.6900 *

## 127) humidity< 80.5 596 158853500 1987.0540 *They can be visualised using a tree:

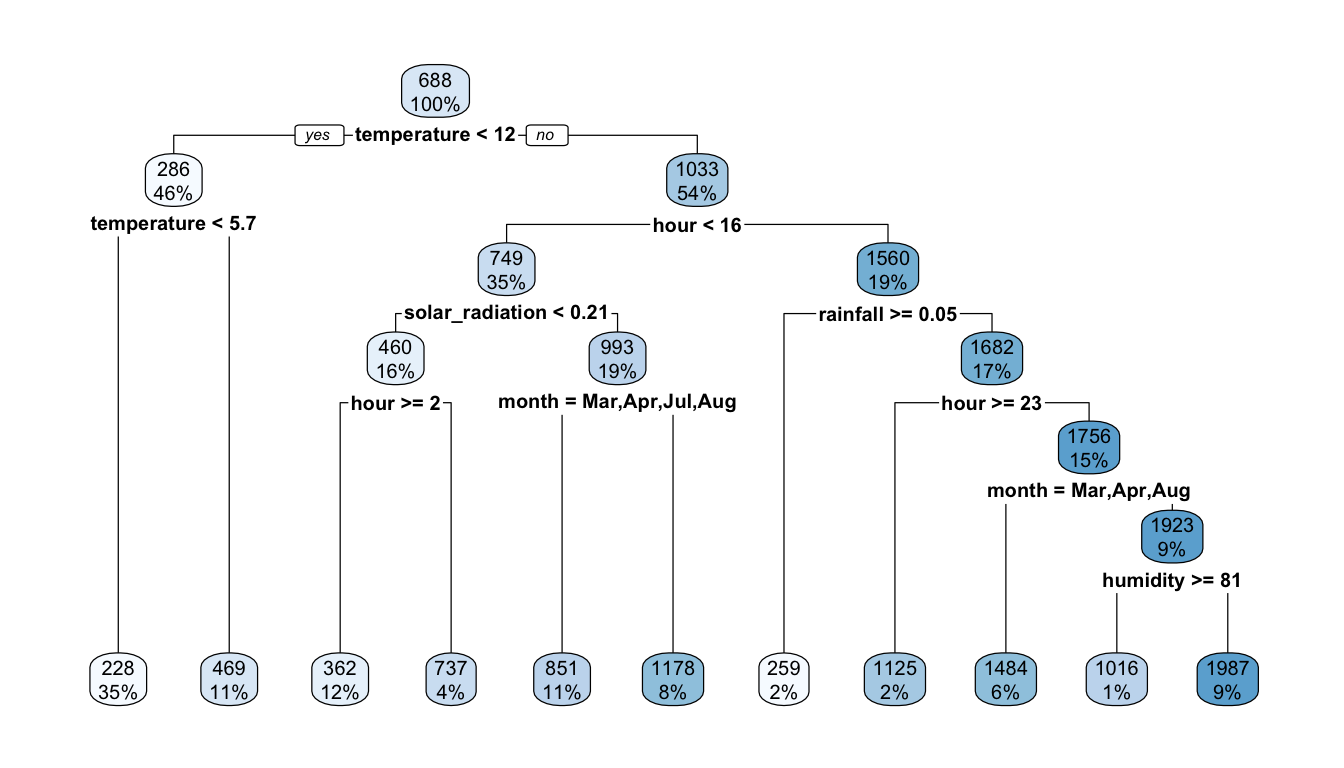

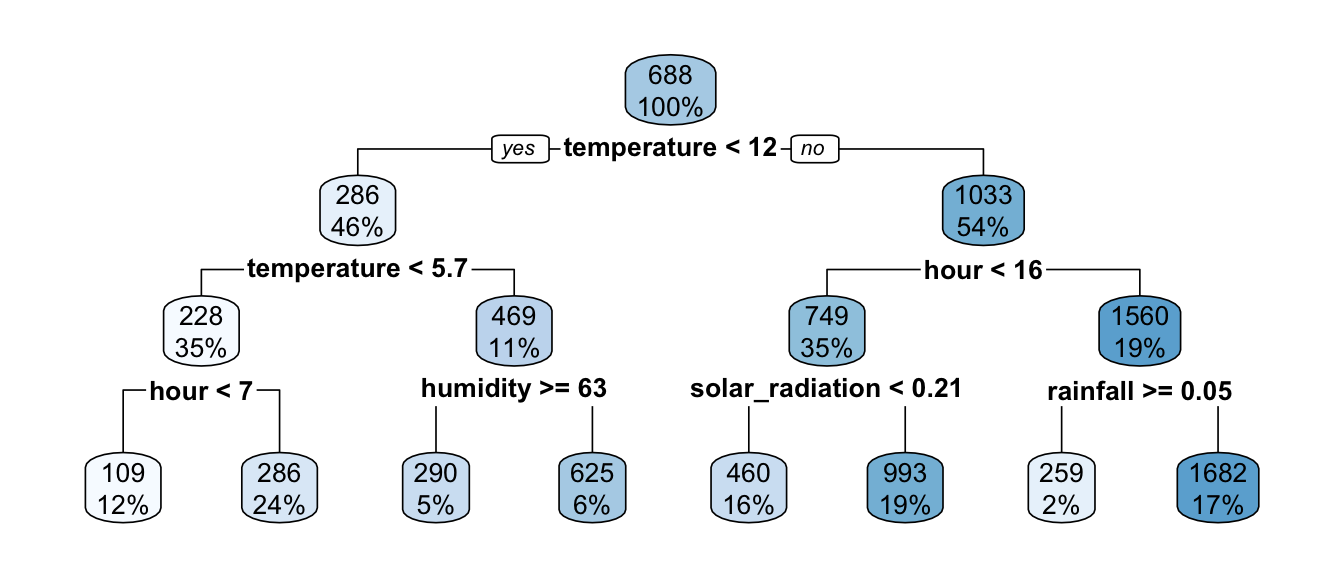

rpart.plot(part_tree)

Figure 4.8: A first decision tree.

The first rule appears on top of the tree. Among all the tested separating rules, the one involving the variable temperature with a threshold of 12 is the one that minimises the residual sum of square (RSS). In other words, this couple of variable/cutoff value is the one that minimises deviations to the mean (variances) in each resulting leaf.

On the root node (top of the graph), the bubble shows two values:

688: this corresponds to the average value of the response variable for the observations in the node (here, the average for all observations)100%: this percentage corresponds to the number of observation from the whole dataset that can be found in that node.

mean(df_train$rented_bike_count)## [1] 687.5492The first variable used to perform a split is temperature. Observations with a value for the variable temperature strictly lower than 12.15 (46%) will go to the left leaf. The others (the remaining 54%) will go to the right leaf.

Among the observations for which temperature is strictly lower than 12.15, the average of the response variable is equal to 286 (once rounded):

df_train %>%

filter(temperature < 12.15) %>%

summarise(mean = mean(rented_bike_count))## # A tibble: 1 × 1

## mean

## <dbl>

## 1 286.In that node, another split is performed, using the temperature variable once again, and the cutoff value that lead to the lowest RSS within that node is 5.65. Hence, observations for which the temperature is lower than 12.15 and lower than 5.65 (35% of all observations of the dataset) will go to the left leaf, while those for which the the temperature is lower than 12.15 and greater than 5.65 (11% of all observations of the dataset) will go to the right leaf.

df_train %>%

filter(temperature < 12.15) %>%

filter(temperature < 5.65) %>%

count() %>%

mutate(prop = n / nrow(df_train))## # A tibble: 1 × 2

## n prop

## <int> <dbl>

## 1 2383 0.352We end up in a final node. The predicted value for such observations will be the average of the response variable in this node:

df_train %>%

filter(temperature < 12.15) %>%

filter(temperature < 5.65) %>%

summarise(pred = mean(rented_bike_count))## # A tibble: 1 × 1

## pred

## <dbl>

## 1 228.And on the right node:

df_train %>%

filter(temperature < 12.15) %>%

filter(temperature > 5.65) %>%

count() %>%

mutate(prop = n / nrow(df_train))## # A tibble: 1 × 2

## n prop

## <int> <dbl>

## 1 748 0.110df_train %>%

filter(temperature < 12.15) %>%

filter(temperature > 5.65) %>%

summarise(pred = mean(rented_bike_count))## # A tibble: 1 × 1

## pred

## <dbl>

## 1 469.Let us detail the process of selecting the variable/cutoff pair. We can create a simple function to help compute the residual sum of squares:

compute_rss <- function(observed, predicted){

sum((observed-predicted)^2)

}Before making any split, the RSS is equal to:

rss_init <-

compute_rss(df_train$rented_bike_count,

mean(df_train$rented_bike_count))

rss_init## [1] 2825639089Let us consider the variable temperature used to partition the data, and a threshold of 12.15. Note that this variable is numerical. We will illustrate next how to procede with categorical data.

variable_split <- "temperature"

threshold <- 12.15We split the data into two subsamples:

- one for which

temperatureis below 12.15 - another one for which

temperatureis greater than or equal to 12.15.

In each subset, we compute the average of the response variable: this will be the prediction made in the node:

tmp_data <-

df_train %>%

mutate(below_t = temperature < 12.15) %>%

select(rented_bike_count, below_t) %>%

group_by(below_t) %>%

mutate(pred = mean(rented_bike_count))

tmp_data## # A tibble: 6,772 × 3

## # Groups: below_t [2]

## rented_bike_count below_t pred

## <dbl> <lgl> <dbl>

## 1 254 TRUE 286.

## 2 204 TRUE 286.

## 3 173 TRUE 286.

## 4 107 TRUE 286.

## 5 78 TRUE 286.

## 6 100 TRUE 286.

## 7 181 TRUE 286.

## 8 460 TRUE 286.

## 9 930 TRUE 286.

## 10 490 TRUE 286.

## # … with 6,762 more rowsWe can then compute the RSS :

rss_after_split <-

compute_rss(tmp_data$rented_bike_count,

tmp_data$pred)

rss_after_split## [1] 1885102486The improvement due to the split, in terms of percentage deviation of the RSS is given by:

-(rss_after_split - rss_init)/rss_init## [1] 0.332858It is the value reported in the split element of the object returned by rpart():

head(part_tree$splits)## count ncat improve index adj

## temperature 6772 -1 0.3328580 12.15 0.0000000

## month 6772 12 0.2719021 1.00 0.0000000

## seasons 6772 4 0.2395872 2.00 0.0000000

## dew_point_temperature 6772 -1 0.2132190 3.55 0.0000000

## hour 6772 -1 0.1572311 6.50 0.0000000

## dew_point_temperature 0 -1 0.9162729 5.25 0.8189077Now, let us wrap-up this code in a function, so that it is easy to make the threshold vary:

#' @param data data frame

#' @param variable_to_predict name of the variable to predict

#' @param variable_split name of the variable used to create the partitions

#' @param threshold threshold used to create the two partitions

rss_split_numeric <-

function(data, variable_to_predict,

variable_split, threshold){

tmp_data <-

data %>%

mutate(below_t = !!sym(variable_split) < threshold) %>%

select(!!sym(variable_to_predict), below_t) %>%

group_by(below_t) %>%

mutate(pred = mean(!!sym(variable_to_predict)))

compute_rss(tmp_data[[variable_to_predict]], tmp_data[["pred"]])

}An example with the same variable (temperature) and threshold (12.15) as earlier:

rss_split_numeric(

data = df_train, variable_to_predict = "rented_bike_count",

variable_split = "temperature", threshold = 12.15)## [1] 1885102486All that we need to to is to make the threshold vary. For example, we can consider values ranging from the minimum of the splitting variable to its maximum, so that 1000 different threshold values are tested:

number_of_cuts <- 1000

thresholds <-

seq(min(df_train$temperature), max(df_train$temperature),

length.out = number_of_cuts)Then, we can loop over these threshold values. At each iteration, we can store the RSS in a vector.

rss_tmp <- rep(NA, number_of_cuts)

for(i in 1:number_of_cuts){

rss_tmp[i] <-

rss_split_numeric(

data = df_train, variable_to_predict = "rented_bike_count",

variable_split = "temperature", threshold = thresholds[i])

}After a few seconds, once the loop has ended, we can look at the threshold value for which the RSS was the lowest:

thresholds[which.min(rss_tmp)]## [1] 12.14555We find the same value as that shown on top of the tree!

If we compute the average value of the number of rented bikes in each partition:

df_train %>%

mutate(below_t = temperature < thresholds[which.min(rss_tmp)]) %>%

select(rented_bike_count, below_t) %>%

group_by(below_t) %>%

summarise(pred = mean(rented_bike_count),

n = n()) %>%

ungroup() %>%

mutate(prop = round(100*n/sum(n)))## # A tibble: 2 × 4

## below_t pred n prop

## <lgl> <dbl> <int> <dbl>

## 1 FALSE 1033. 3641 54

## 2 TRUE 286. 3131 46We get the values shown on top of the children nodes.

Then, we need to consider all variables as the variable used to partition the data (not only temperature). We will not do it here, but I am sure that you understood the process of selecting the splitting rule. What you may be now wondering, is how to proceed if the variable used to partition the data is not numerical, but categorical. In such a case, we just need to consider the combination of binary splits. For example, let us consider the variable month. There are \(l=12\) months in the dataset, so that makes \(2^{(l - 1)} - 1 = 2047\) possible binary splits:

- (Jan) vs (Feb or Mar or Apr or May or Jun or Jul or Aug or Sep or Oct or Nov or Dec)

- (Jan or Feb) vs (Mar or Apr or May or Jun or Jul or Aug or Sep or Oct or Nov or Dec)

- (Jan or Mar) vs (Feb or Apr or May or Jun or Jul or Aug or Sep or Oct or Nov or Dec)

- …

- (Jan or Feb or Mar) vs (Apr or May or Jun or Jul or Aug or Sep or Oct or Nov or Dec)

- …

- (Jan or Feb or Mar or Apr or May or Jun or Jul or Aug or Sep or Oct or Nov) vs (Dec).

We can define a simple function that will give us all possible ways of creating unique binary partitions from a set of classes.

#' @param classes vector of names of classes

possible_splits <- function(classes){

l <- length(classes)

if(l>2){

resul <- resul <- rep(NA, 2^(l-1) - 1)

i <- 1

for(k in 1:(l-1)){

tmp <- combn(c(classes), k)

for(j in 1:ncol(tmp)){

if(tmp[1, j] != classes[1]) break

current <- str_c(tmp[,j], collapse = ",")

resul[i] <- str_c(tmp[,j], collapse = ",")

i <- i+1

}

}

}else{

# If only two classes

resul <- classes[1]

}

resul

}This function just needs to be fed with a vector of classes. For example, if the classes are A, B, C, D, there will be \(2^{(4 - 1)} - 1=7\) unique ways to partition the data into two areas.

possible_splits(c("A", "B", "C", "D"))## [1] "A" "A,B" "A,C" "A,D" "A,B,C" "A,B,D" "A,C,D"Let us illustrate how the partition is done on the tree by moving to one of the specific node: the one concerning observations for which temperature is greater than or equal to 12.15, the hour is lower than 15.5, the solar radiation is greater than or equal to 0.205.

Recall the plot:

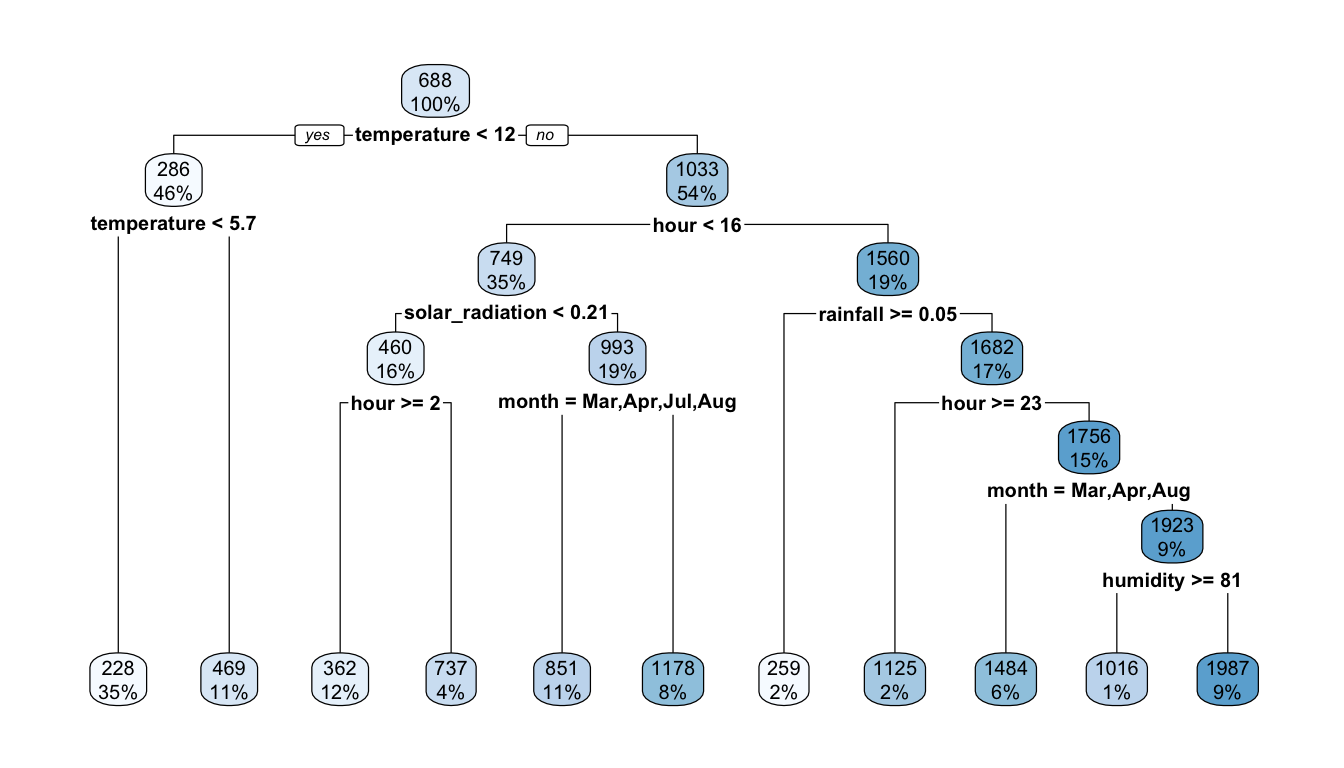

rpart.plot(part_tree)

Figure 4.9: The same first decision tree.

df_tmp <-

df_train %>%

filter(temperature >= 12.15 & hour < 15.5 & solar_radiation >= 0.205)The next partition, according to the graph, is done by separating observations for which the month is either March, April, July, or August from other observations. Let us try to retrieve such a result by hand.

At this node, we are left with 1281 observations:

nrow(df_tmp)## [1] 1281The different levels (or classes) of the month variable are the following:

classes <- as.character(unique(df_tmp$month))

classes## [1] "Mar" "Apr" "May" "Jun" "Jul" "Aug" "Sep"We note that there are less than 12 months in the dataset at this point. The number of classes is thus lower and the number of possible splits will thus be much smaller than 2047.

We can use our little function to obtain the list of possible splits:

list_possible_splits <- possible_splits(classes)

head(list_possible_splits)## [1] "Mar" "Mar,Apr" "Mar,May" "Mar,Jun" "Mar,Jul" "Mar,Aug"There are actually 7 classes, so there are 63 possible splits:

length(list_possible_splits)## [1] 63Let us consider the following split:

list_possible_splits[2]## [1] "Mar,Apr"All we need to do is to create a variable that will state whether the month is either in March or in April, i.e., either in one of the elements of the following vector:

str_split(list_possible_splits[2], ",")[[1]]## [1] "Mar" "Apr"Then, we can group the observations by this dummy variable and compute the average of the response variable in each subset. This average will be the prediction made at this node, if we use (March or April) as the decision rule.

tmp_data <-

df_tmp %>%

mutate(var_in_categ = month %in%

str_split(list_possible_splits[2],",")[[1]])%>%

select(rented_bike_count, var_in_categ) %>%

group_by(var_in_categ) %>%

mutate(pred = mean(rented_bike_count))We can check how many observations are in each subset:

table(tmp_data$var_in_categ)##

## FALSE TRUE

## 1065 216And we need to compute the RSS:

compute_rss(tmp_data[["rented_bike_count"]], tmp_data[["pred"]])## [1] 235207726Now, let us wrap-up this in a function, so that it can be used over a loop where the decision rule is changed at each iteration:

#' @param data data frame

#' @param variable_to_predict name of the variable to predict

#' @param variable_split name of the variable used to create the partitions

#' @param split_rule vector of classes used to create the two partitions

rss_split_categ <-

function(data, variable_to_predict,

variable_split, split_rule){

tmp_data <-

data %>%

mutate(var_in_categ = !!sym(variable_split) %in% split_rule) %>%

select(!!sym(variable_to_predict), var_in_categ) %>%

group_by(var_in_categ) %>%

mutate(pred = mean(!!sym(variable_to_predict)))

compute_rss(tmp_data[[variable_to_predict]], tmp_data[["pred"]])

}With the same rule as that used in the example, the function rss_split_categ() can be used as follows:

rss_split_categ(df_tmp,

variable_to_predict = "rented_bike_count",

variable_split = "month",

split_rule = str_split(list_possible_splits[2], ",")[[1]])## [1] 235207726Let us loop over all the possible splits, and store the RSS at each iteration.

rss_tmp <- rep(NA, length(list_possible_splits))

for(i in 1:length(list_possible_splits)){

rss_tmp[i] <-

rss_split_categ(

df_tmp,

variable_to_predict = "rented_bike_count",

variable_split = "month",

split_rule = str_split(list_possible_splits[i], ",")[[1]])

}The RSS is at its lowest for this variable with the following rule:

list_possible_splits[which.min(rss_tmp)]## [1] "Mar,Apr,Jul,Aug"Good news, we obtain the same result as that provided by the rpart() function! We can also check that the predictions in each subset and the proportion of observations are the same as that obtained with rpart().

df_tmp %>%

mutate(var_in_categ = month %in%

str_split(list_possible_splits[which.min(rss_tmp)],",")[[1]])%>%

select(rented_bike_count, var_in_categ) %>%

group_by(var_in_categ) %>%

summarise(pred = mean(rented_bike_count),

n = n()) %>%

mutate(prop = round(100*n/nrow(df_train)))## # A tibble: 2 × 4

## var_in_categ pred n prop

## <lgl> <dbl> <int> <dbl>

## 1 FALSE 1178. 558 8

## 2 TRUE 851. 723 11rpart.plot(part_tree)

Figure 4.10: The same (again) first decision tree.

To sum up, at each node, each variable is tested as a candidate to segment the input space into two parts. Multiple splits are tested for each variables. The final choice is the variable and the splitting rule leading to the lowest RSS value.

Once a split is done, the splitting process can be repeated in each partition. This process goes on recursively until some stopping criteria. All the variables are again considered as candidates, even the one that was used in the previous split.

4.3.2 Stopping the Recursive Splitting Process

The question that can then be asked is: what criteria should be used to decide to stop the recursive partitioning process?

There are multiple ways of stopping the algorithm. These ways are controlled by the arguments of the function rpart.control() from {rpart}. Some of them are the following:

maxdepth: we can set the maximum depth of the tree, with the root node counted as depth 0minsplit: we can decide that in order to make a split, there should be at least a minimum number of observations in a node ; if it is not the case, the split should not be attemptedminbucket: we can also decide that a split should not be performed if it leads to a leaf node with too few observations (by default, this value is set in R as 1/3 ofminsplit)cp: in the case of regression trees with anova splitting, if the split leads to an increase in the R-squared less thancpcompared to the previous value, then the split should not be done. Settingcp=0will lead to overlooking this criterion as a stopping criterion.

These arguments can be directly provided to the rpart() function. In the following example, a split will be attempted if there is at least 1,000 observation in the node for which the split is performed, and if it leads to partitions in which at least 500 observations are left in the leaf node. If attempting the split respects these two conditions, we will overlook the fact that the R-squared should be increased at the next step, by setting cp=-1.

part_tree <-

rpart(rented_bike_count ~.,

data = df_train %>% select(-y_binary, -date),

method = "anova",

minsplit = 1000,

minbucket = 500,

cp=0)

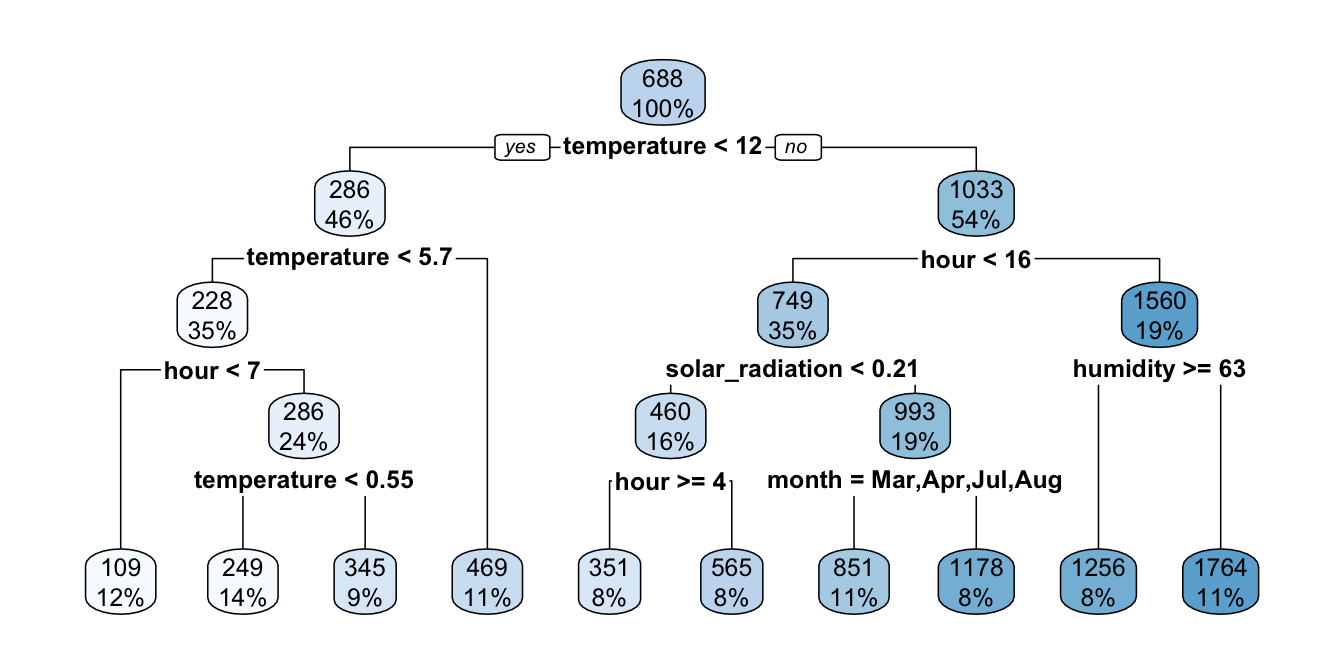

rpart.plot(part_tree)

Figure 4.11: Decision tree where splits are made if there is at least 1000 obs. in the node and if the number of obs. in the resulting leaves are at least 500.

If now we allow splits to be done with the same conditions but only if they lead to an increase in the R-squared value of 0.01 from one step to the next:

part_tree <-

rpart(rented_bike_count ~.,

data = df_train %>% select(-y_binary, -date),

method = "anova",

minsplit = 1000,

minbucket = 500,

cp=.01)

rpart.plot(part_tree)

Figure 4.12: Growing the tree stopped earlier as we imposed restrictions on the improvement needed to make split.

It can be noted that the tree is a bit shallower.

The tree grown has a depth of 4. Let us constrain the tree to have a maximum depth of only 3.

part_tree <-

rpart(rented_bike_count ~.,

data = df_train %>% select(-y_binary, -date),

method = "anova",

maxdepth = 3,

cp = 0)

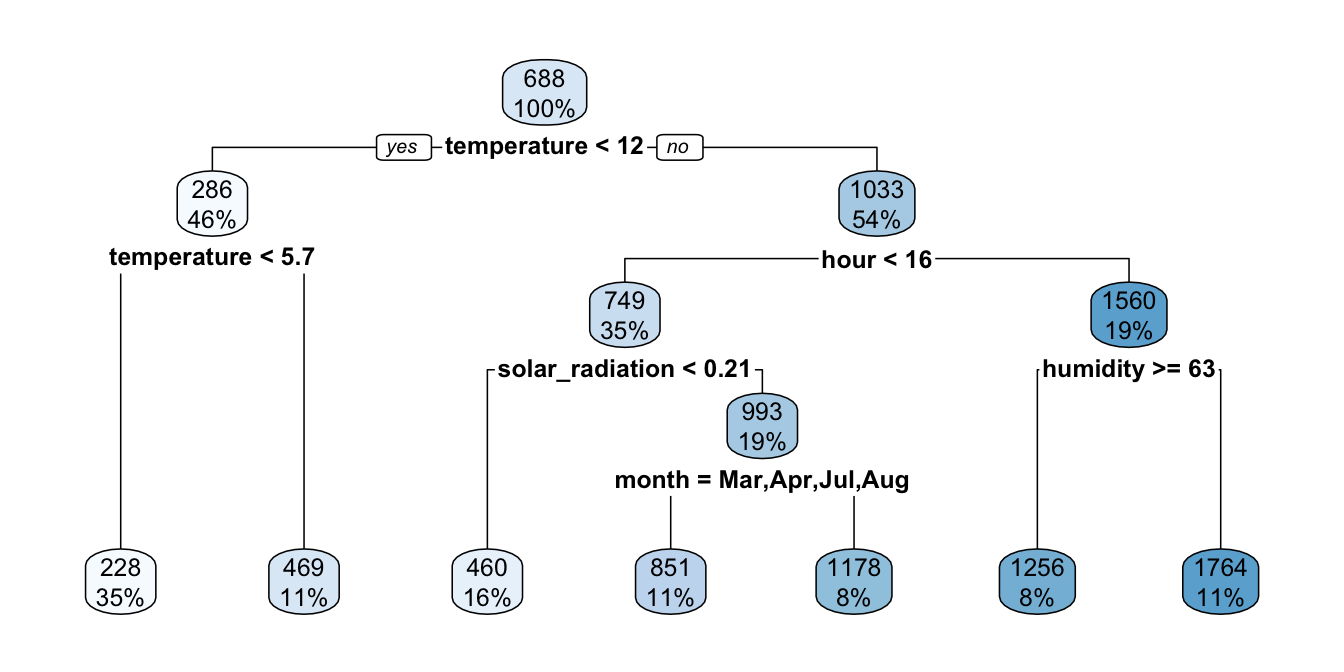

rpart.plot(part_tree)

Figure 4.13: Decision tree when constraining its depth.

The algorithm stopped earlier.

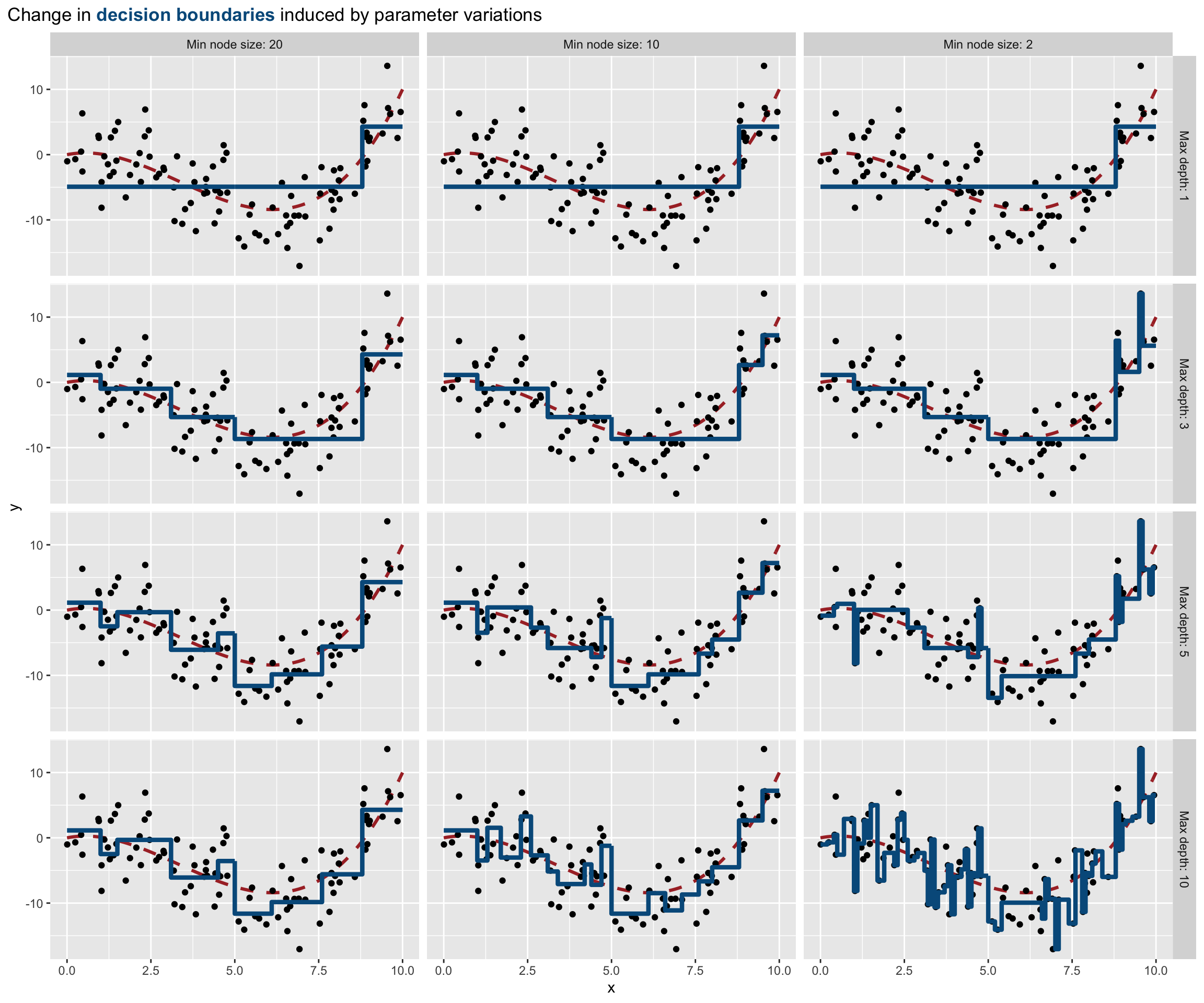

Let us spent a bit more time with the two parameters controlling the maximum depth of the tree and the minimum number of observations in a node for a split to be attempted. We can easily give the intuition that the higher the depth of the tree, the higher the risk of overfitting. In a same way, the lower the minimum number of observations in a node for a split to be attempted, the higher the risk of overfitting. Let us give an illustration with synthetic data. Let us assume that we observe 100 observations drawn from the following process:

\[y_i= .1x_i^3 -x_i^2+x_i + \varepsilon_i, \quad \varepsilon \sim \mathcal{N}(0,4).\]

set.seed(123)

n <- 100

x <- runif(n=n, min=0, max=10)

eps <- rnorm(n, 0, 4)

f <- function(x) .1*x^3-1*x^2+x

y <- f(x)+epsLet us plot the observed values and show with a red dotted line the expected value of data drawn from this data generating process.

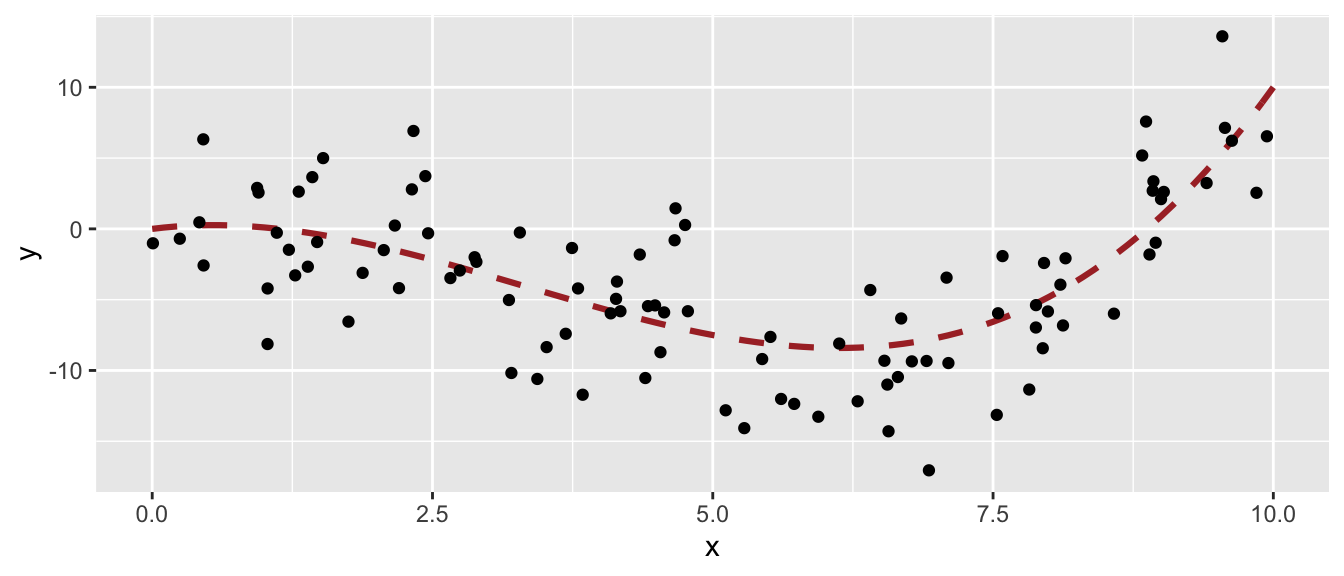

df_sim <- tibble(x=x, y=y)

ggplot(data = df_sim, mapping = aes(x = x, y = y)) +

geom_line(data = tibble(x=seq(0, 10, by = .1), y=f(x)),

size = 1.1, linetype = "dashed", colour = "#AA2F2F") +

geom_point()

Figure 4.14: Generating Data Process and generated data.

Now, let us train a regression tree on the observed values. Let us limit the depth of the tree to 1.

part_tree <-

rpart(y ~ x,

data = df_sim,

method = "anova",

maxdepth = 1,

cp = 0

)

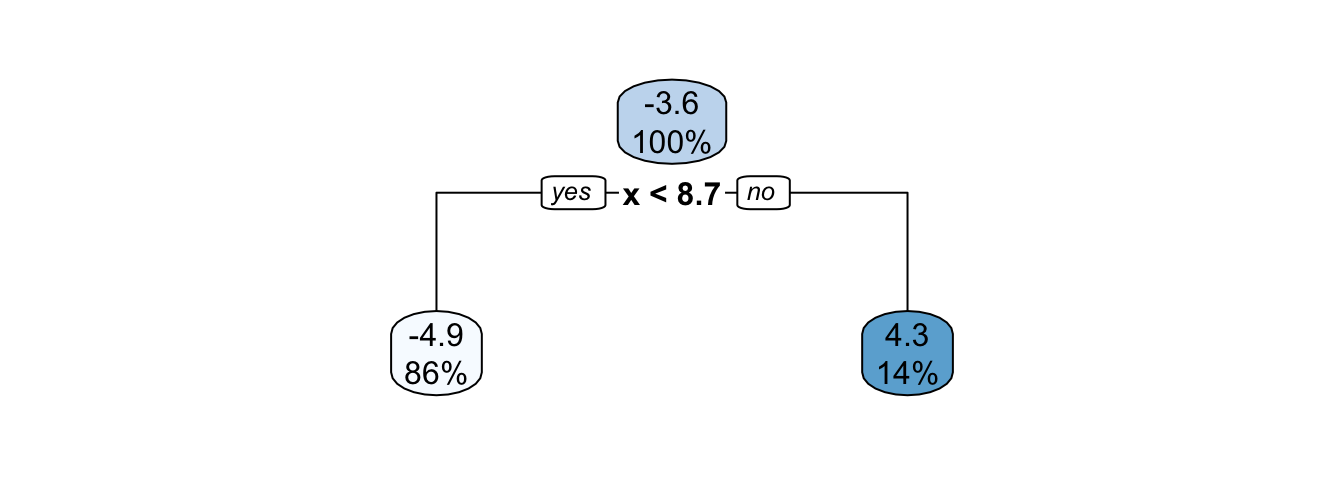

rpart.plot(part_tree)

Figure 4.15: Decision tree built on the synthetic data with a maximum depth of 1.

The decision boundary is such that if x is lower than 8.7, the predicted value will be -4.9; otherwise it will be 4.3.

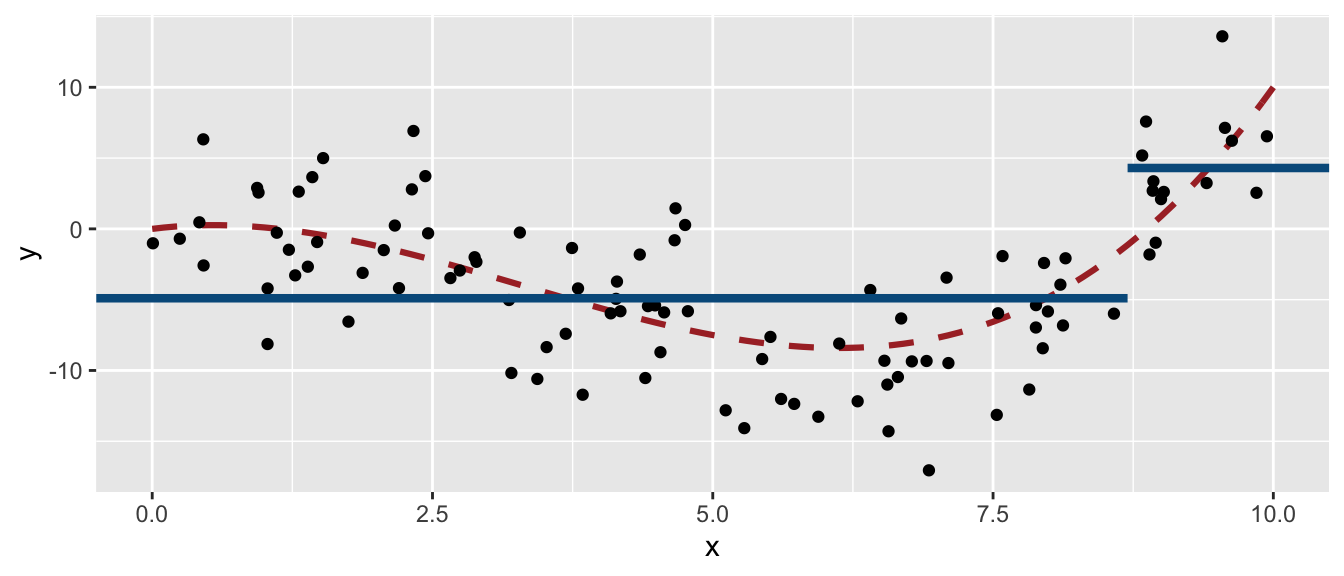

ggplot(mapping = aes(x = x, y = y)) +

geom_line(data = tibble(x=seq(0, 10, by = .1), y=f(x)),

size = 1.1, linetype = "dashed", colour = "#AA2F2F") +

geom_point(data = df_sim) +

geom_segment(data = tibble(x = c(-Inf, 8.7),

xend = c(8.7, Inf),

y = c(-4.9, 4.3),

yend = c(-4.9, 4.3)),

mapping = aes(x=x, y=y, xend=xend, yend=yend),

colour = "#005A8B", size = 1.5)

Figure 4.16: Decision boundary of the grown tree.

Now let us vary the maxdepth and minsplit parameters over a loop. We can create a function that will return the decision boundaries (to be precise, it is not exactly the exact decision boundaries that is returned, but a very close approximation).

get_pred_part <- function(max_depth, min_split){

part_tree <-

rpart(y ~ x,

data = df_sim,

method = "anova",

minsplit = min_split,

maxdepth = max_depth,

cp = 0

)

tibble(

x = seq(0, 10, by = .1),

y = predict(part_tree, newdata = tibble(x=seq(0, 10, by = .1))),

max_depth = max_depth,

min_split = min_split

)

}We will loop over all the combinations that can be made up with the values for maxdepth and minsplit that we define, and apply the get_pred_part() at each iteration.

predicted_vals <-

list(

max_depth = c(1, 3, 5, 10),

min_split = c(2, 10, 20)) %>%

cross() %>%

map_df(purrr::lift(get_pred_part))

predicted_vals## # A tibble: 1,212 × 4

## x y max_depth min_split

## <dbl> <dbl> <dbl> <dbl>

## 1 0 -4.92 1 2

## 2 0.1 -4.92 1 2

## 3 0.2 -4.92 1 2

## 4 0.3 -4.92 1 2

## 5 0.4 -4.92 1 2

## 6 0.5 -4.92 1 2

## 7 0.6 -4.92 1 2

## 8 0.7 -4.92 1 2

## 9 0.8 -4.92 1 2

## 10 0.9 -4.92 1 2

## # … with 1,202 more rowslibrary(ggtext)

ggplot(mapping = aes(x = x, y = y)) +

geom_line(data = tibble(x=seq(0, 10, by = .1), y=f(x)),

size = 1.1, linetype = "dashed", colour = "#AA2F2F") +

geom_point(data = df_sim) +

geom_step(data = predicted_vals %>%

mutate(max_depth = factor(

max_depth,

levels = sort(unique(predicted_vals$max_depth)),

labels = str_c("Max depth: ",

sort(unique(predicted_vals$max_depth)))),

min_split = factor(

min_split,

levels = sort(unique(predicted_vals$min_split),

decreasing = TRUE),

labels = str_c("Min node size: ",

sort(unique(predicted_vals$min_split),

decreasing = TRUE)))

),

colour = "#005A8B", size = 1.5) +

facet_grid(max_depth~min_split) +

labs(title = str_c(

"Change in **<span style='color:#005A8B'>",

"decision boundaries</span>** induced by parameter variations")

) +

theme(

plot.title = element_markdown(lineheight = 1.1),

plot.title.position = "plot",

legend.text = element_markdown(size = 11)

)

Figure 4.17: Varying the parameters affect the decision boundary and may lead to overfitting.

In the previous graph, we clearly note that:

- if the maximum depth of the tree that is grown is 1, then the minimum node size does not play any role

- as long as we increase the maximum depth, the boundary decision gets closer to the points: there is a risk of overfitting

- as long as the number of minimum observations in a node for a split to be attempted decreases, the boundary decision gets closer to the points: there is once again a risk of overfitting.

4.3.2.1 Pruning

With the previous example, we saw that smaller trees lead to lower variance. They also lead to better interpretation. On the other hand, this is done at the expense of a little bias. We might be tempted to rely on growing small trees, by stropping the algorithm as soon as the decrease in the SSR due to the current split falls below some threshold. But this is too short-sided, as a poor split could be followed by a very good split.

Another strategy, called tree pruning, consists in growing a large tree, and then prune it back to keep only a subtree. To determine the level at which pruning is carried out, we can proceed by cross-validation. But instead of considering all trees, only a subset of those are considered. The cross-validation process is used to select a penalization parameter (\(\lambda\)). As explained in Emmanuel Flachaire’s course, we put a prize to pay for having a tree with many terminal nodes \(J\), or regions,

\[\min \sum_{j=1}^{J} \sum_{i \in R_j}(y_i-\overline{y}_{R_j})^2+ \lambda J.\]

With a value of \(\lambda=0\), the subtree we end up with is the large tree we grew in the first place. As long as we increase the value of \(\lambda\), having many terminal nodes \(J\) become costlier.

In {rpart}, the number of folds for the cross-validation is provided through the xval argument of the rpart.control() function. It can also be given directly to the rpart() function.

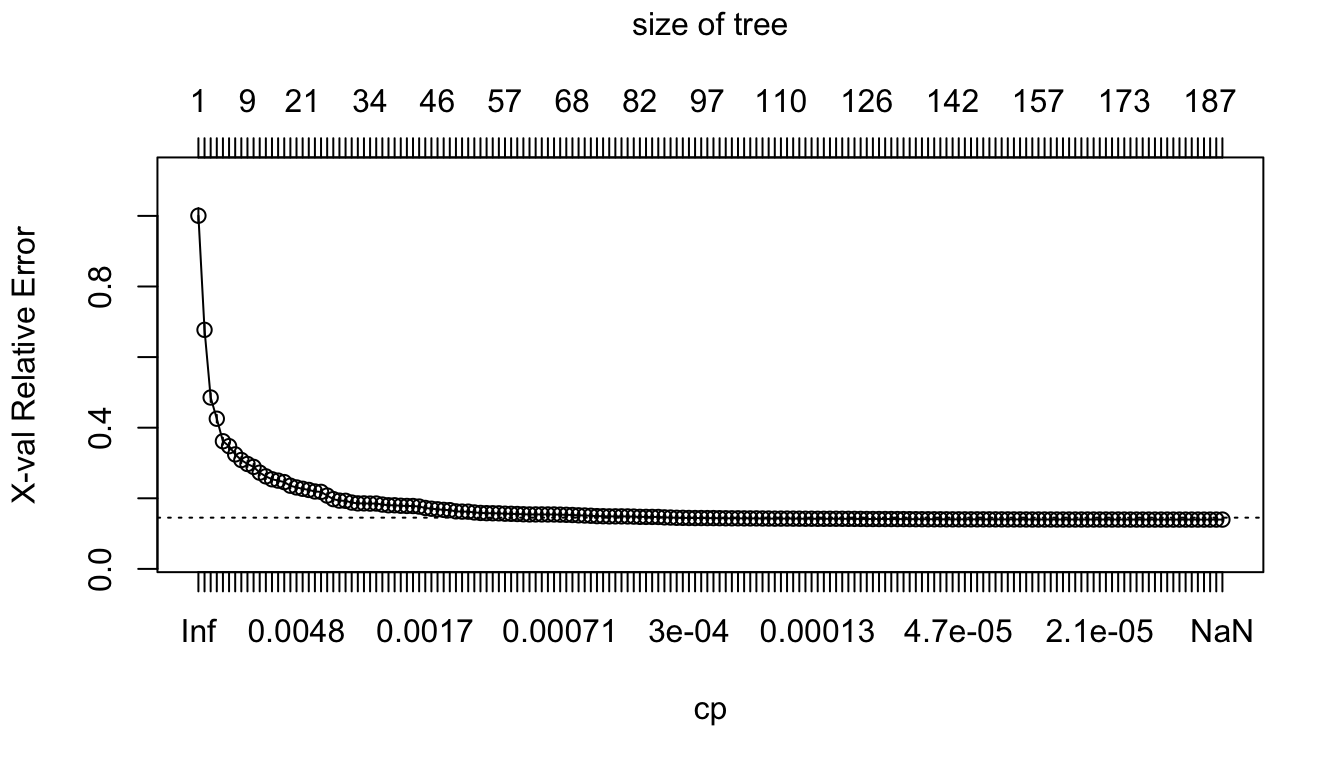

Let us perform a 10-fold cross validation with the Seoul bike data (this is actually done by default when calling the rpart() function).

set.seed(123)

large_tree <-

rpart(rented_bike_count ~.,

data = df_train %>% select(-y_binary, -date),

method = "anova",

control = rpart.control(xval = 10, cp = -1, minbucket = 20))

# Number of nodes

nodes_large_tree <- as.numeric(rownames(large_tree$frame))

max(rpart:::tree.depth(nodes_large_tree))## [1] 16We can plot the results of the cross-validation:

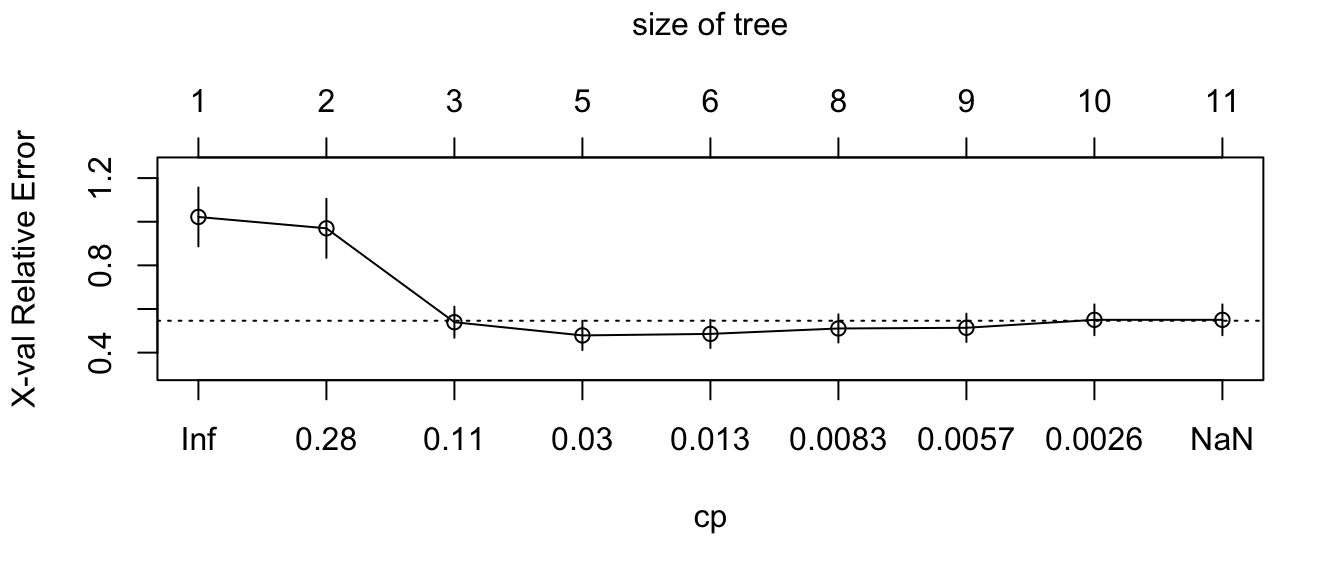

plotcp(large_tree)

Figure 4.18: Relative error depending on the complexity parameter (10-fold cross-validation results), for the Seoul bike data.

The results of the cross-validation are stored in the cptable attribute of the result. The column CP (cost-complexity) corresponds to different values of \(\lambda\), and the column xerror gives the resulting error.

head(large_tree$cptable)## CP nsplit rel error xerror xstd

## 1 0.33285801 0 1.0000000 1.0005565 0.021514547

## 2 0.19316259 1 0.6671420 0.6771662 0.015698709

## 3 0.07139607 2 0.4739794 0.4855051 0.012615119

## 4 0.05903069 3 0.4025833 0.4253927 0.010949168

## 5 0.01704578 4 0.3435527 0.3615918 0.009915513

## 6 0.01659517 5 0.3265069 0.3476690 0.009469073Let us find the minimum value of the cost-parameter \(\lambda\):

min_val <-

as_tibble(large_tree$cptable) %>%

arrange(xerror) %>%

slice(1)

min_val## # A tibble: 1 × 5

## CP nsplit `rel error` xerror xstd

## <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0.00000162 185 0.0973 0.140 0.00544Then, we can consider all the values of the cost-parameter within a 1-standard error deviation from that value:

candidates <-

as_tibble(large_tree$cptable) %>%

filter( (xerror > min_val$xerror-min_val$xstd) &

(xerror < min_val$xerror+min_val$xstd)) %>%

arrange(desc(CP))

candidates## # A tibble: 90 × 5

## CP nsplit `rel error` xerror xstd

## <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0.000314 91 0.106 0.145 0.00546

## 2 0.000311 92 0.106 0.145 0.00546

## 3 0.000295 93 0.106 0.144 0.00546

## 4 0.000295 94 0.105 0.144 0.00546

## 5 0.000294 95 0.105 0.144 0.00546

## 6 0.000286 96 0.105 0.144 0.00546

## 7 0.000285 97 0.104 0.144 0.00546

## 8 0.000269 98 0.104 0.144 0.00546

## 9 0.000262 99 0.104 0.143 0.00546

## 10 0.000259 100 0.104 0.143 0.00545

## # … with 80 more rowsAmong those candidates, we can pick up the values that results in the smallest subtree:

cp_val <-

candidates %>%

slice(1) %>%

magrittr::extract2("CP")

cp_val## [1] 0.0003139774Then, the tree can be pruned accordingly with this cost-complexity value, using the prune() function from {rpart}:

pruned_tree <- prune(large_tree, cp=cp_val)Let us check the depth of the resulting tree:

nodes_pruned_tree <- as.numeric(rownames(pruned_tree$frame))

max(rpart:::tree.depth(nodes_pruned_tree))## [1] 12And for comparison, let us grow a smaller tree:

small_tree <-

rpart(rented_bike_count ~.,

data = df_train %>% select(-y_binary, -date),

method = "anova",

maxdepth = 2,

cp = 0,

xval = 0)

# Number of nodes

nodes_small_tree <- as.numeric(rownames(small_tree$frame))

max(rpart:::tree.depth(nodes_small_tree))## [1] 2Let us compute the MSE on the test set:

pred_test_small <- predict(small_tree, newdata = df_test)

pred_test_large <- predict(large_tree, newdata = df_test)

pred_test_pruned <- predict(pruned_tree, newdata = df_test)

compute_mse <- function(observed, predicted){

mean((observed-predicted)^2)

}

mse_small_tree <-

compute_mse(observed = df_test$rented_bike_count,

predicted = pred_test_small)

mse_large_tree <-

compute_mse(observed = df_test$rented_bike_count,

predicted = pred_test_large)

mse_pruned_tree <-

compute_mse(observed = df_test$rented_bike_count,

predicted = pred_test_pruned)

mse <- scales::number(c(mse_small_tree, mse_large_tree,

mse_pruned_tree))

names(mse) <- c("Small", "Large", "Pruned")

mse## Small Large Pruned

## "295 291" "164 097" "168 395"Here, pruning the tree unfortunately produced a higher MSE in the test set. Let us have another look at pruning with our synthetic dataset this time.

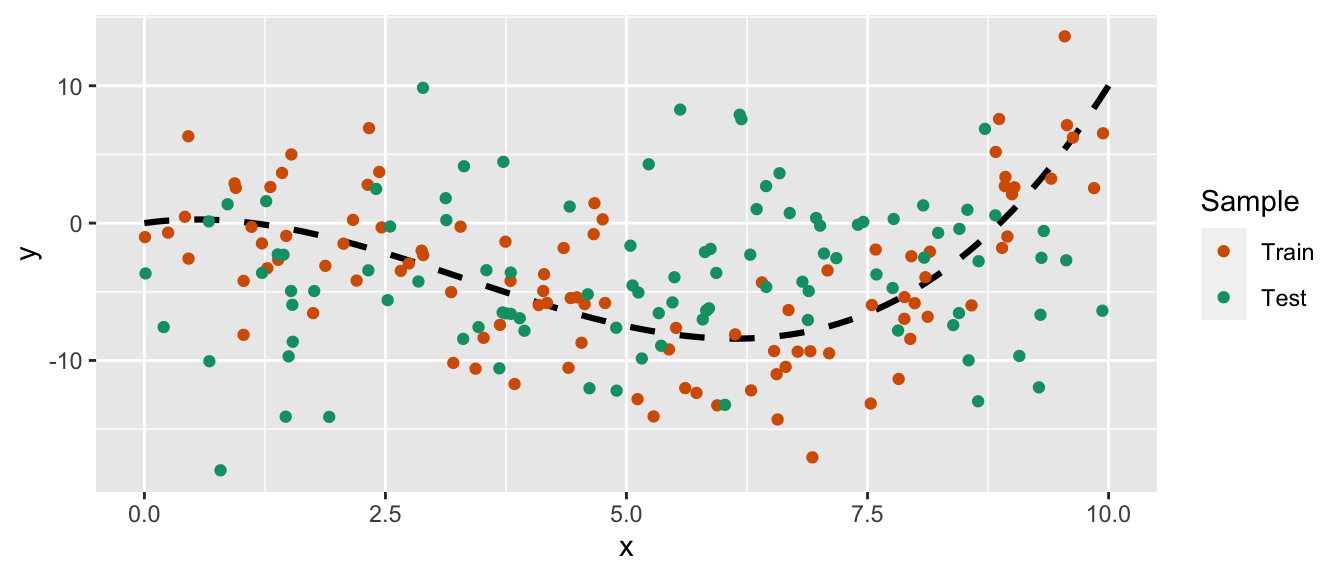

Let us draw some new observations from the same data generating process.

set.seed(234)

x_new <- runif(n=n, min=0, max=10)

eps <- rnorm(n, 0, 4)

y_new <- f(x)+eps

df_sim_new <- tibble(x=x_new, y=y_new)

ggplot(mapping = aes(x = x, y = y)) +

geom_line(data = tibble(x=seq(0, 10, by = .1), y=f(x)),

colour = "black", size = 1.1, linetype = "dashed") +

geom_point(data = df_sim %>% mutate(sample = "Train") %>%

bind_rows(

df_sim_new %>% mutate(sample = "Test")

),

mapping = aes(colour = sample)) +

scale_colour_manual("Sample",

values = c("Train" = "#D55E00", "Test" = "#009E73"))

Figure 4.19: Synthetic data.

Let us build a large tree using the training sample:

part_tree_large <-

rpart(y ~ x,

data = df_sim,

method = "anova",

control = rpart.control(

xval = 10,

cp = -1,

minbucket = 5)

)

rpart.plot(part_tree_large)

Figure 4.20: Unpruned tree.

The cost-parameter can be chosen thanks to the 10-fold cross-validation that was performed:

plotcp(part_tree_large)

Figure 4.21: Relative error depending on the complexity parameter, for the synthetic data.

The same procedure to select \(\lambda\) as that used on the Seoul bike data can be done. In a first step, we select the value that minimises the error:

min_val <-

as_tibble(part_tree_large$cptable) %>%

arrange(xerror) %>%

slice(1)

min_val## # A tibble: 1 × 5

## CP nsplit `rel error` xerror xstd

## <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0.0185 4 0.344 0.479 0.0673Then, we consider all values within a 1-standard error:

candidates <-

as_tibble(part_tree_large$cptable) %>%

filter( (xerror > min_val$xerror-min_val$xstd) &

(xerror < min_val$xerror+min_val$xstd)) %>%

arrange(desc(CP))

candidates## # A tibble: 5 × 5

## CP nsplit `rel error` xerror xstd

## <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 0.0482 2 0.440 0.539 0.0711

## 2 0.0185 4 0.344 0.479 0.0673

## 3 0.00927 5 0.325 0.486 0.0647

## 4 0.00749 7 0.307 0.511 0.0646

## 5 0.00429 8 0.299 0.514 0.0649And finally, we pick the values among the candidates that produces the shallowest tree:

cp_val <-

candidates %>%

slice(1) %>%

magrittr::extract2("CP")

cp_val## [1] 0.04824351We can then prune the large tree:

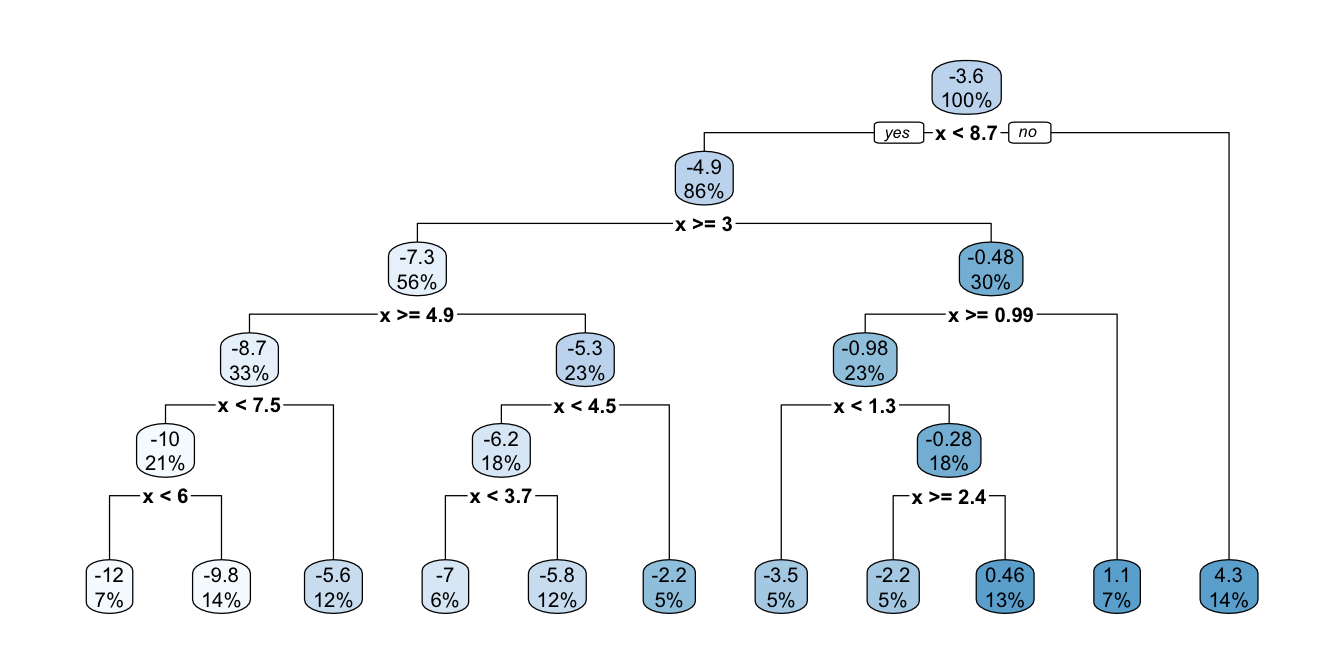

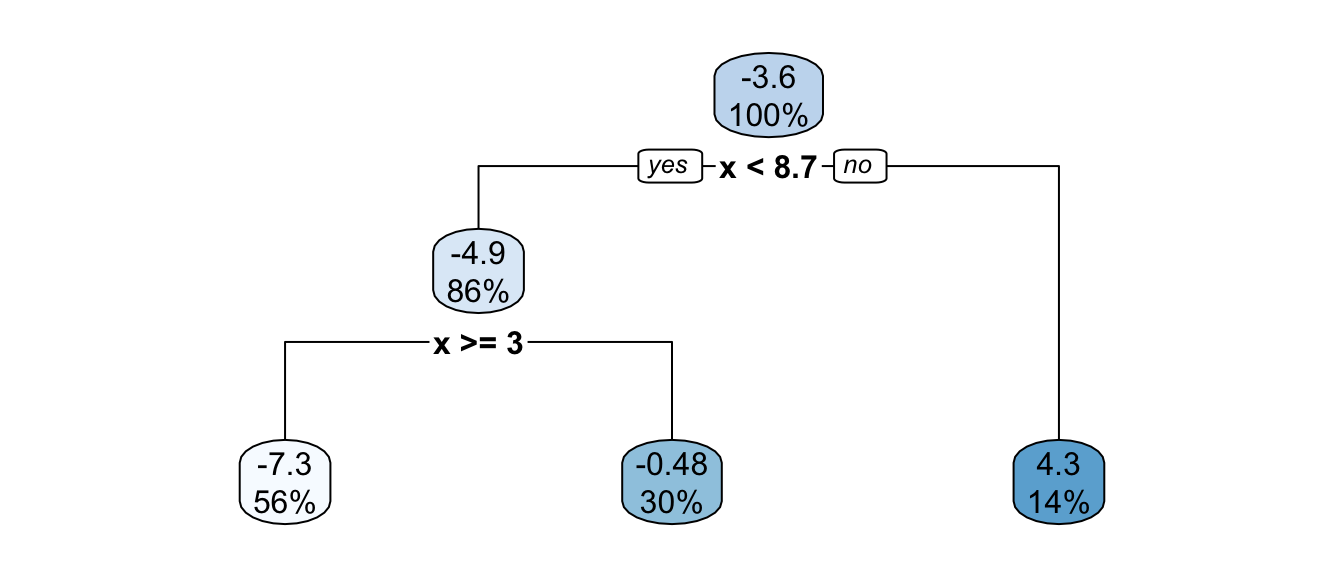

pruned_tree <- prune(part_tree_large, cp=cp_val)

rpart.plot(pruned_tree)

Figure 4.22: Pruned tree, synthetic data.

The depth of the pruned tree is indeed smaller:

nodes_pruned_tree <- as.numeric(rownames(pruned_tree$frame))

max(rpart:::tree.depth(nodes_pruned_tree))## [1] 2Let us visualise the boundaries of each tree:

boundaries <-

tibble(

x = seq(0, 10, by = .1),

y = predict(part_tree_large,

newdata = tibble(x=seq(0, 10, by = .1)))

)

boundaries_pruned <-

tibble(

x = seq(0, 10, by = .1),

y = predict(pruned_tree,

newdata = tibble(x=seq(0, 10, by = .1)))

)

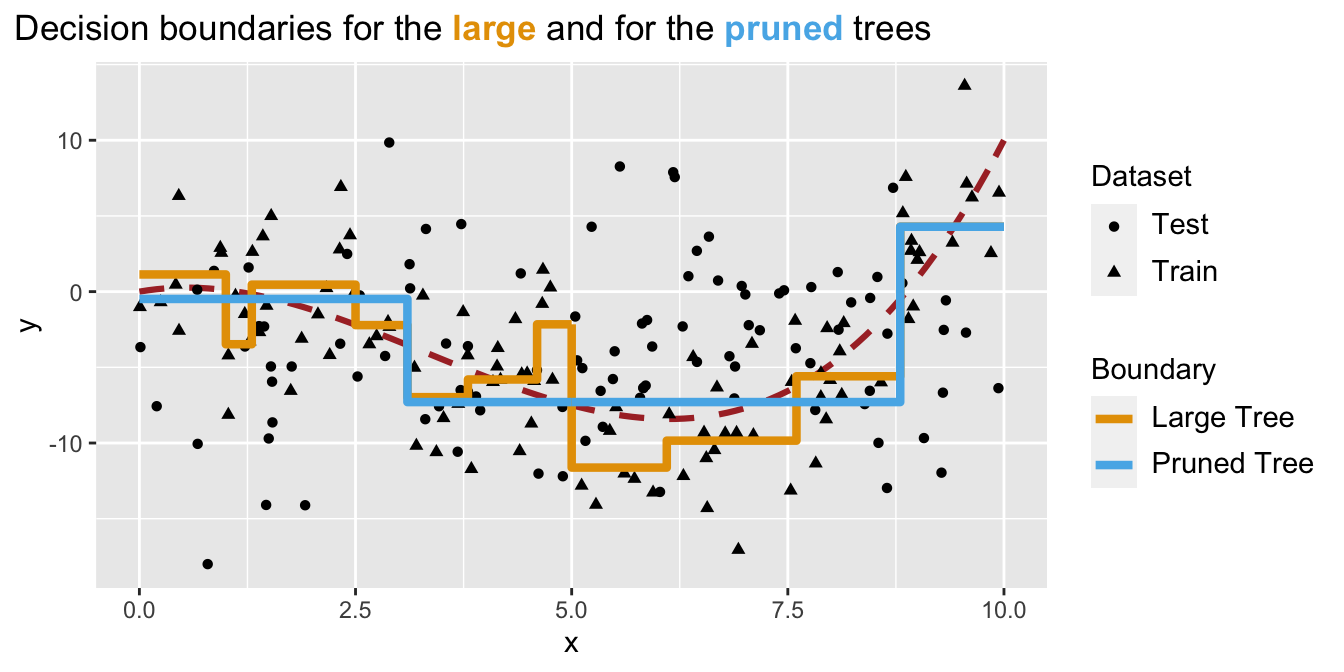

ggplot(mapping = aes(x = x, y = y)) +

geom_line(data = tibble(x=seq(0, 10, by = .1), y=f(x)),

size = 1.1, linetype = "dashed", colour = "#AA2F2F") +

geom_point(data = df_sim %>% mutate(sample = "Train") %>%

bind_rows(

df_sim_new %>% mutate(sample = "Test")

),

mapping = aes(shape = sample)) +

geom_step(data = boundaries %>% mutate(model = "Large Tree") %>%

bind_rows(

boundaries_pruned %>% mutate(model = "Pruned Tree")

),

mapping = aes(colour = model),

# colour = "#005A8B",

size = 1.5) +

labs(title = str_c(

"Decision boundaries for the **<span style='color:#E69F00'>",

"large</span>** and for the **<span style='color:#56B4E9'>",

"pruned</span>** trees")

) +

scale_colour_manual("Boundary",

values = c("Large Tree" = "#E69F00",

"Pruned Tree" = "#56B4E9")) +

scale_shape_discrete("Dataset") +

theme(

plot.title = element_markdown(lineheight = 1.1),

plot.title.position = "plot",

legend.text = element_markdown(size = 11)

)

Figure 4.23: Decision boundaries are different after the tree was pruned.

Now, using these boundaries, let us compute the MSE on the unseen data (test set):

pred_test_large <- predict(part_tree_large, newdata = df_sim_new)

pred_test_pruned <- predict(pruned_tree, newdata = df_sim_new)

compute_mse <- function(observed, predicted){

mean((observed-predicted)^2)

}

mse_large_tree <-

compute_mse(observed = df_sim_new$y, predicted = pred_test_large)

mse_pruned_tree <-

compute_mse(observed = df_sim_new$y, predicted = pred_test_pruned)

mse <- scales::number(c(mse_large_tree, mse_pruned_tree))

names(mse) <- c("Large", "Pruned")

print("MSE : \n")## [1] "MSE : \n"mse## Large Pruned

## "65" "47"We avoided overfitting by pruning the tree and ended-up with a lower MSE on the test.

4.3.3 Classification Trees

So far, we have considered a numerical target/response variable. Let us now consider the case where the predictor is categorical. This implies two changes:

- the criterion used to select the variable/cutoff pairs can no longer be the RSS

- we can use another metric, such as the classification error rate for example

- or the Gini index, or the Entropy

- the prediction made for each partition is no longer the average of the response variable

- we can rely on a voting rule based on the proportions of the categories: for example, the proportions of each class can considered as the probabilities that the observations of a partition belong to each corresponding class.

Instead of using the classification error, it is more common to either use the Gini impurity index or the entropy to select the pair variable/cutoff that will be used to make a split.

The Gini impurity index at some node \(\mathcal{N}\), as reminded in Emmanuel Flachaire’s course, is given by:

\[G(\mathcal{N}) = \sum_{k=1}^{K} p_k(1-p_k)= 1-\sum_{k=1}^{K} p_k^2,\]

where \(p_k\) is the fraction of observations (or training samples) labeled with class \(k\) in the node. If all the \(p_k\) are close to 0, or to 1, the Gini impurity index has a small value: in such cases, there will be mostly observations from the same class, the node will be homogeneous. The Gini impurity index thus gives an idea of the total variance across the \(K\) classes in a node.

Entropy is defined as follows: \[E(\mathcal{N}) = -\sum_{k=1}^K p_k \log(p_k)\] If the \(p_k\) are all near 0 or near 1, the entropy will also be near 0.

After a split into two leaves \(\mathcal{N}_{L}\) and \(\mathcal{N}_{R}\), the Gini impurity index becomes:

\[G(\mathcal{N}_{L}, \mathcal{N}_{R}) = p_L G(\mathcal{N}_L) + p_R G(\mathcal{N}_R),\] where \(p_L\) and \(p_R\) are the proportion of observations in \(\mathcal{N}_{L}\) and \(\mathcal{N}_{R}\)

In a similar fashion, the entropy becomes:

\[E(\mathcal{N}_{L}, \mathcal{N}_{R}) = p_L E(\mathcal{N}_L) + p_R E(\mathcal{N}_R).\]

Splits can be done as long as they substantially decrease impurity, i.e., when

\[\Delta = G(\mathcal{N}) - G(\mathcal{N}_L, \mathcal{N}_R) > \epsilon,\]

where \(\epsilon\) is a threshold value set by the user.

The choice of the pair variable/cutoff can be done so as to select the one that minimises the impurity, i.e., the one that maximises \(\Delta\).

Let us look at an example on the Seoul bike data. First, let us look at how some little changes need to be made when calling the rpart() function.

With regression trees, the argument method was set to "anova". Now that the variable of interest is categorical, we need to change the value to "class". By default, the Gini impurity index is used as the splitting index. If we want to use entropy, we can feed the argument parms with a list with the element split equal to "class".

Let us build a tree to predict our binary variable (recall that it is equal to "High" if the number of hourly bikes is greater than 300 and "Low" otherwise). Let us make sure that at least 20 observations are in a node before a split is attempted (minsplit = 20) and that there are at least 7 observations in each terminal leaves (minbucket = 7).

classif_tree_gini <-

rpart(y_binary ~.,

data = df_train %>% select(-rented_bike_count, -date),

method = "class",

minsplit = 20,

minbucket = 7,

parms = list(split = "gini"))The tree can be visualised thanks to the rpart.plot() function, as in the case of a regression tree. The argument extra allows to change the type of information reported on the graph. Here, since we have a binary response variable, the value is automatically set to 106 if not specified differently. It is made of two parts:

6: the probability of the second class only is reported+100: the percentage of observations in the node is also added

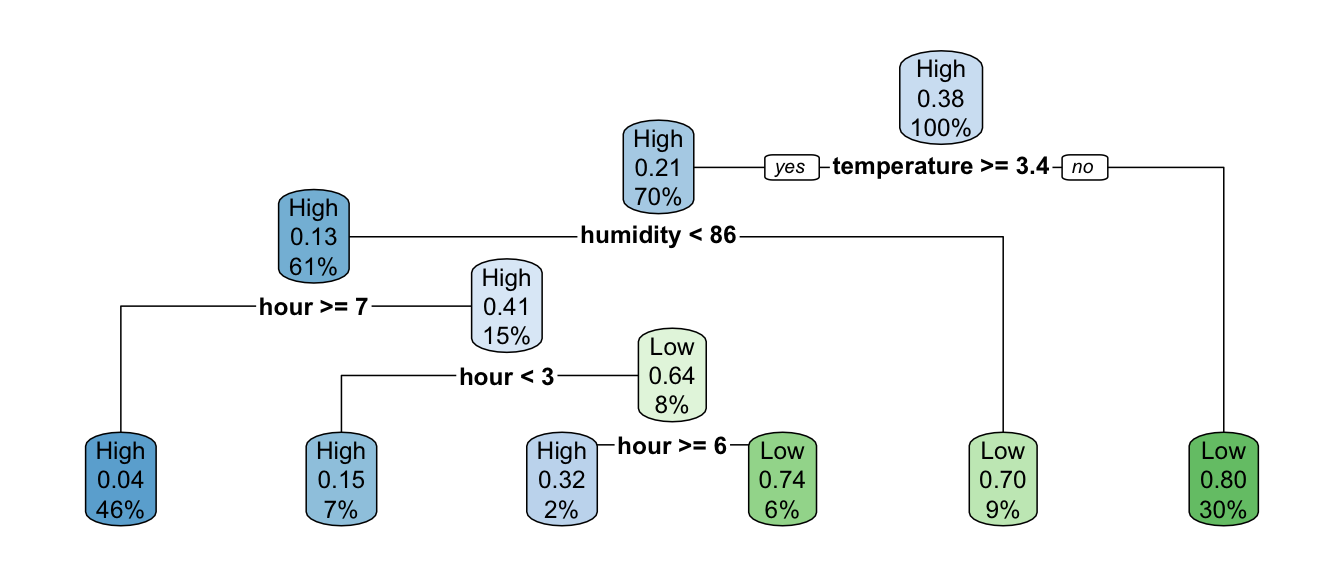

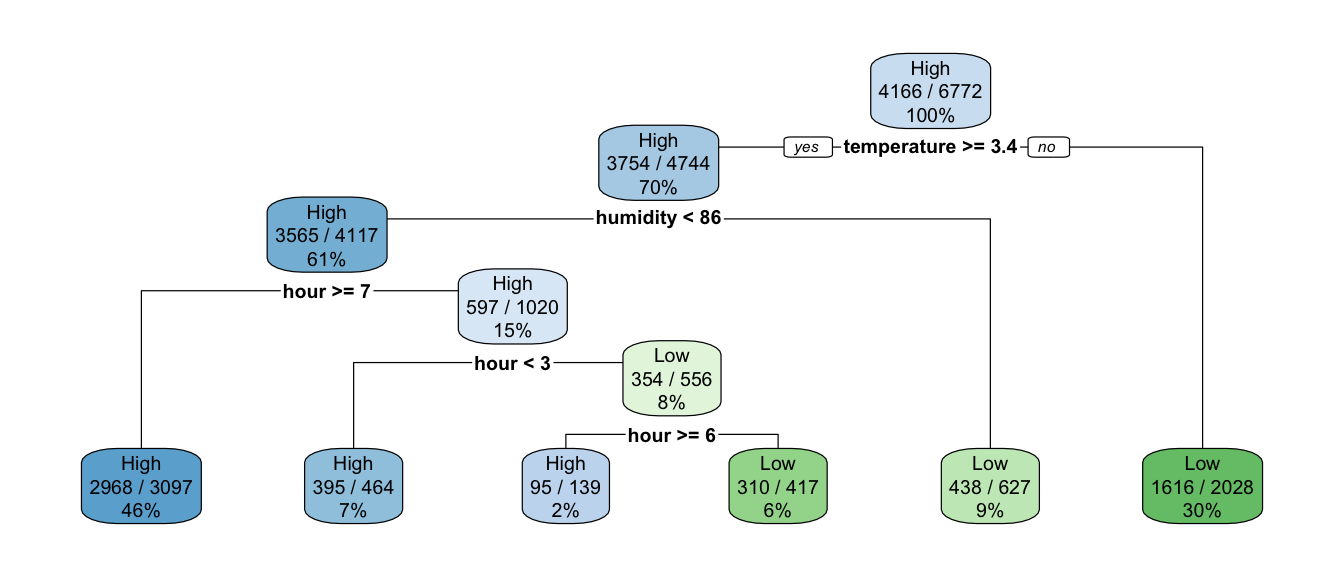

rpart.plot(classif_tree_gini, extra = 106)

Figure 4.24: A first classification tree grown on Seoul bike data.

Here, at the top of the tree where 100% of observations are in the node, we read on the graph that the probability to be predicted as the second class (Low) is 0.38. This corresponds to the percentage of Low observations in the training dataset.

prop.table(table(df_train$y_binary))##

## High Low

## 0.6151802 0.3848198If the temperature is greater than or equal to 3.35 (we go to the left), we are left with 70% of the observations. In the resulting node, the probability to be classified as the second class (Low) is estimated at 0.21. This means that 21% of the observations in that node are labelled as Low:

df_train %>%

filter(temperature >= 3.35) %>%

group_by(y_binary) %>%

count() %>%

ungroup() %>%

mutate(prop = round(n/sum(n), 2))## # A tibble: 2 × 3

## y_binary n prop

## <chr> <int> <dbl>

## 1 High 3754 0.79

## 2 Low 990 0.21This concerns 70% of the observations:

df_train %>%

group_by(temperature < 3.35) %>%

count() %>%

ungroup() %>%

mutate(prop = n / sum(n))## # A tibble: 2 × 3

## `temperature < 3.35` n prop

## <lgl> <int> <dbl>

## 1 FALSE 4744 0.701

## 2 TRUE 2028 0.299If the temperature is strictly lower than 3.35 (we go to the right), which concerns the remaining 30% of the observations, there are 20% of observations label with the first class (High) and 80%with the second (Low):

df_train %>%

filter(temperature < 3.35) %>%

group_by(y_binary) %>%

count() %>%

ungroup() %>%

mutate(prop = round(n/sum(n), 2))## # A tibble: 2 × 3

## y_binary n prop

## <chr> <int> <dbl>

## 1 High 412 0.2

## 2 Low 1616 0.8This is a terminal leaf node, so any observation that falls in it, the predicted class will be that of the most frequent class in that node. With this tree, if an observation has temperature >=3.35:

- the probability that the number of bikes is of class “High” returned by the model will be 0.2

- the probability that the number of bikes is of class “Low” returned by the model will be 0.8.

When calling the plot, we set extra=106. As \(106 \mod 100 = 6\), only the probability of the second class is repord. Here is the list of available values that can be used (this list is extracted from the help page of the rpart() function):

1: the number of observations that fall in the node2: the classification rate at the node (number of correct classifications and the number of observations in the node)3: misclassification rate at the node (number of incorrect classifications and the number of observations in the node)4: probability per class of observations in the node (conditioned on the node, sum across a node is 1)5: like 4 but don’t display the fitted class6: probability of the second class only. Useful for binary responses (the one we used)7: like 6 but don’t display the fitted class8: probability of the fitted class9: probability relative to all observations – the sum of these probabilities across all leaves is 1. This is in contrast to the options above, which give the probability relative to observations falling in the node – the sum of the probabilities across the node is 110: like 9 but display the probability of the second class only. (Useful for binary responses).11: like 10 but don’t display the fitted class

rpart.plot(classif_tree_gini, extra = 102)

Figure 4.25: Showing the classification rate at the node.

If we want to use entropy instead of the Gini impurity index as the splitting index, all we need to do is to change the value of the element split in the list given to the parms argument so that it becomes "information" instead of "gini".

classif_tree_entropy <-

rpart(y_binary ~.,

data = df_train %>% select(-rented_bike_count, -date),

method = "class",

parms = list(split = "information"))

rpart.plot(classif_tree_entropy)

Figure 4.26: Classification tree build using entropy instead of gini to measure impurity index.

Similarly to what we did with regression trees, let us have a closer look at how the splitting rule is performed. Let us consider temperature as the splitting variable, and let us set a cutoff value of 10.

variable_split <- "temperature"

threshold <- 10Let us consider the root as the current node:

current_node <- df_trainThe proportion of each class, \(p_k\), can be computed as follows:

prop_current_node <-

current_node %>%

group_by(y_binary) %>%

count() %>%

ungroup() %>%

mutate(p_k = n / sum(n))

prop_current_node## # A tibble: 2 × 3

## y_binary n p_k

## <chr> <int> <dbl>

## 1 High 4166 0.615

## 2 Low 2606 0.385The Gini impurity index in that current node is:

gini_node <-

prop_current_node %>%

mutate(g_k = p_k*(1-p_k)) %>%

magrittr::extract2("g_k") %>%

sum()

gini_node## [1] 0.4734671Let us define a function that computes the Gini impurity index on a given node:

#' @param data_node tibble/data.frame with the data of the node

#' @param target name of the target variable (discrete variable)

compute_gini <- function(data_node, target){

prop_current_node <-

data_node %>%

group_by(!!sym(target)) %>%

count() %>%

ungroup() %>%

mutate(p_k = n / sum(n))

prop_current_node %>%

mutate(g_k = p_k*(1-p_k)) %>%

magrittr::extract2("g_k") %>%

sum()

}Which can be used this way:

compute_gini(current_node, "y_binary")## [1] 0.4734671Let us split the data into two partitions, depending on the splitting variable and the tested cutoff:

left_node <-

current_node %>%

filter(temperature < threshold)

right_node <-

current_node %>%

filter(temperature >= threshold)The proportions of observations in each node \(p_L\) and \(p_R\) can be computed as follows:

p_L <- nrow(left_node) / nrow(current_node)

p_R <- nrow(right_node) / nrow(current_node)

cat(str_c("Prop left: ", p_L, "\n", "Prop right: ", p_R))## Prop left: 0.427495569994093

## Prop right: 0.572504430005907The Gini impurity coefficient in left and right nodes:

gini_left <- compute_gini(left_node, "y_binary")

gini_right <- compute_gini(right_node, "y_binary")

c(gini_left, gini_right)## [1] 0.4353491 0.2749603The weighted average of the Gini impurity indices:

gini_l_r <- p_L*gini_left + p_R * gini_right

gini_l_r## [1] 0.3435258Let us recap:

- the Gini impurity index in the current node is equal to 0.4734671

- the Gini impurity index after the split is equal to 0.3435258

Hence, the decrease in impurity (\(\Delta\)) after the split is equal to:

gini_node - gini_l_r## [1] 0.1299412Equivalently, the split allowed to reduce the impurity by -0.27%:

(gini_l_r - gini_node)/gini_node## [1] -0.2744462Let us wrap-up the previous code in a function:

gini_split <- function(data_node, variable_to_predict,

variable_split, threshold,

minbucket){

if(is.numeric(data_node[[variable_split]])){

left_node <-

data_node %>%

filter(!!sym(variable_split) < threshold)

right_node <-

data_node %>%

filter(!!sym(variable_split) >= threshold)

}else{

left_node <-

data_node %>%

filter(!!sym(variable_split) %in% threshold)

right_node <-

data_node %>%

filter( !( !!sym(variable_split) %in% threshold ))

}

# If there is less than a given number of obs in the leaves

# warning

warning_min_bucket <-

any( table(left_node[[variable_to_predict]]) < minbucket) |

any( table(right_node[[variable_to_predict]]) < minbucket)

# Proportions of obs in the resulting leaves

p_L <- nrow(left_node) / nrow(current_node)

p_R <- nrow(right_node) / nrow(current_node)

# Gini in leaves

gini_left <- compute_gini(left_node, "y_binary")

gini_right <- compute_gini(right_node, "y_binary")

gini_l_r <- p_L*gini_left + p_R * gini_right

list(gini_l_r = gini_l_r, warning_min_bucket = warning_min_bucket)

}This function can then be applied as follows:

gini_split(

data_node = df_train, variable_to_predict = "y_binary",

variable_split = "temperature", threshold = 10, minbucket = 7)## $gini_l_r

## [1] 0.3435258

##

## $warning_min_bucket

## [1] FALSELet us consider multiple cutoffs:

number_of_cuts <- 1000

thresholds <-

seq(min(df_train$temperature), max(df_train$temperature),

length.out = number_of_cuts)We can loop over these values of cutoff.

gini_leaves <-

map(thresholds, ~gini_split(

data_node = df_train, variable_to_predict = "y_binary",

variable_split = "temperature", threshold = ., minbucket = 7))The resulting values of \(G(\mathcal{N}_L, \mathcal{N}_R)\) can be stored in a table:

gini_leaves_df <-

tibble(

g_leaves = map_dbl(gini_leaves, "gini_l_r"),

warning_min_bucket = map_lgl(gini_leaves, "warning_min_bucket"),

threshold = thresholds) %>%

mutate(delta = gini_node - g_leaves,

warning_min_bucket = factor(

warning_min_bucket, levels = c(TRUE, FALSE),

labels = c("Not permitted", "Permitted")))

gini_leaves_df## # A tibble: 1,000 × 4

## g_leaves warning_min_bucket threshold delta

## <dbl> <fct> <dbl> <dbl>

## 1 0.473 Permitted -17.8 0

## 2 0.473 Not permitted -17.7 0.0000437

## 3 0.473 Not permitted -17.7 0.0000437

## 4 0.473 Not permitted -17.6 0.0000437

## 5 0.473 Not permitted -17.6 0.0000437

## 6 0.473 Not permitted -17.5 0.0000437

## 7 0.473 Not permitted -17.5 0.0000704

## 8 0.473 Not permitted -17.4 0.000158

## 9 0.473 Not permitted -17.3 0.000158

## 10 0.473 Not permitted -17.3 0.000158

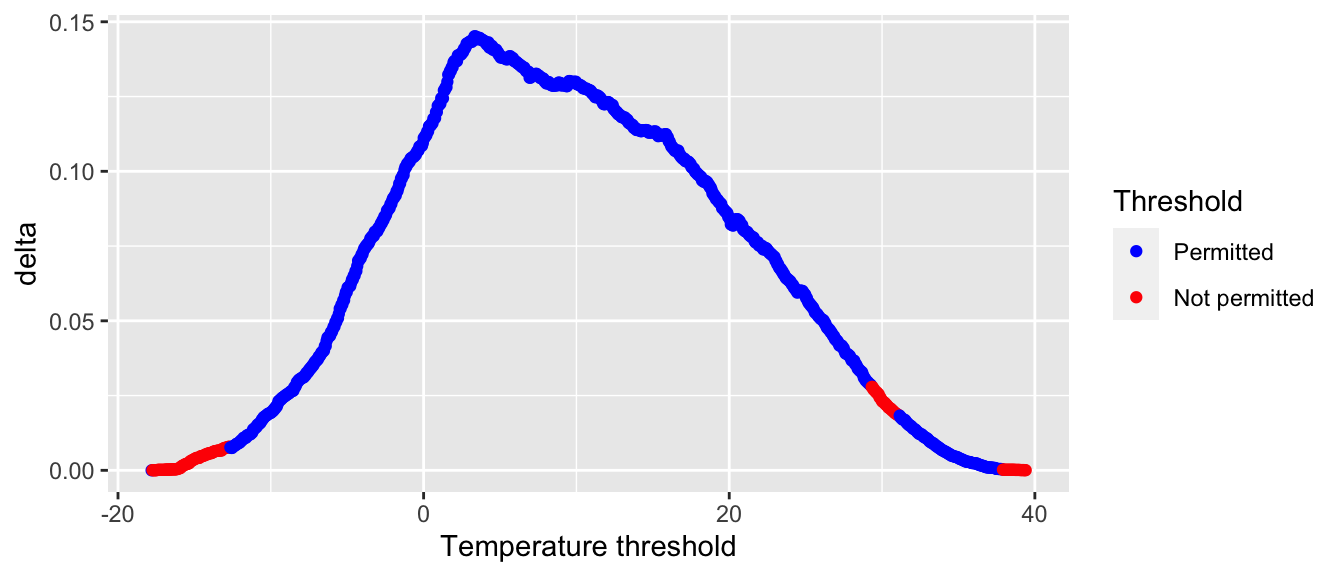

## # … with 990 more rowsWhen the threshold lead to a split in which there were too few observations (less than 7) in a leaf, we labelled the result as “Not permitted” in the warming_min_bucket column. The improvement obtained with each tested cutoff value can be visualised on a graph:

ggplot(data = gini_leaves_df,

mapping = aes(x = threshold, y = delta)) +

geom_point(mapping = aes(colour = warning_min_bucket)) +

scale_colour_manual(

"Threshold",

values = c("Permitted" = "blue", "Not permitted" = "red")) +

labs(x = "Temperature threshold") +

theme(plot.title.position = "plot")

Figure 4.27: Decrease in the impurity design.

gini_leaves_df %>%

filter(warning_min_bucket == "Permitted") %>%

arrange(desc(delta))## # A tibble: 852 × 4

## g_leaves warning_min_bucket threshold delta

## <dbl> <fct> <dbl> <dbl>

## 1 0.328 Permitted 3.33 0.145

## 2 0.328 Permitted 3.39 0.145

## 3 0.329 Permitted 3.44 0.145

## 4 0.329 Permitted 3.50 0.145

## 5 0.329 Permitted 3.61 0.145

## 6 0.329 Permitted 3.67 0.145

## 7 0.329 Permitted 3.73 0.144

## 8 0.329 Permitted 3.79 0.144

## 9 0.329 Permitted 3.56 0.144

## 10 0.330 Permitted 3.84 0.144

## # … with 842 more rowsThe best threshold for the split made with temperature is the one, among the permitted solutions, that leads to the highest impurity decrease:

gini_l_r_temperature <-

gini_leaves_df %>%

filter(warning_min_bucket == "Permitted") %>%

arrange(desc(delta))

gini_l_r_temperature## # A tibble: 852 × 4

## g_leaves warning_min_bucket threshold delta

## <dbl> <fct> <dbl> <dbl>

## 1 0.328 Permitted 3.33 0.145

## 2 0.328 Permitted 3.39 0.145

## 3 0.329 Permitted 3.44 0.145

## 4 0.329 Permitted 3.50 0.145

## 5 0.329 Permitted 3.61 0.145

## 6 0.329 Permitted 3.67 0.145

## 7 0.329 Permitted 3.73 0.144

## 8 0.329 Permitted 3.79 0.144

## 9 0.329 Permitted 3.56 0.144

## 10 0.330 Permitted 3.84 0.144

## # … with 842 more rowsWe obtain a value very close to that reported in the graph (the specific 3.35 value was not tested in our sequence of cutoffs).

To be exhaustive, we should consider all other variables, and not only temperature, to select the best pair of variable/cutoff to perform the split.

If we look at the value reported in the improve column of the splits element returned by the rpart() function, we actually get \(n_\mathcal{I} \times \Delta\), where \(n_\mathcal{I}\) is the number of observations in the current node.

head(classif_tree_gini$splits)## count ncat improve index adj

## temperature 6772 1 982.9149704 3.35 0.0000000

## seasons 6772 4 793.1418098 1.00 0.0000000

## month 6772 12 793.1418098 2.00 0.0000000

## dew_point_temperature 6772 1 539.9467740 2.65 0.0000000

## hour 6772 1 434.9381343 6.50 0.0000000

## seasons 0 4 0.9208506 3.00 0.7357002Compared with:

nrow(current_node) * (gini_node-gini_l_r_temperature$g_leaves[1])## [1] 982.915More explanations on the rows of that table will be provided afterwards in this section and in the variable importance section.

Let us just give a few examples of other competitor variables. The element splits returned by the rpart() function contains, among other things, some information relative to those competitor variables. In our example, the first five rows of this split table report the improvement in the fit depending on the variable used to make the split (the value reported concerns the best cutoff for each variable tested).

head(classif_tree_gini$splits, 5)## count ncat improve index adj

## temperature 6772 1 982.9150 3.35 0

## seasons 6772 4 793.1418 1.00 0

## month 6772 12 793.1418 2.00 0

## dew_point_temperature 6772 1 539.9468 2.65 0

## hour 6772 1 434.9381 6.50 0Let us recall that the value in the improve column correspond to \(n_\mathcal{I} \times \Delta\). The Gini impurity index in the root node is:

(gini_initial <- compute_gini(df_train, "y_binary"))## [1] 0.4734671If we use the temperature variable as the splitting one, the increase in the goodness of fit (as measured by the average of the Gini impurity index computed on the two resulting leaves) can be obtained with the following code:

gini_split_temperature <-

gini_split(

data_node = df_train, variable_to_predict = "y_binary",

variable_split = "temperature", threshold = 3.35, minbucket = 7)

nrow(df_train) * (gini_initial - gini_split_temperature$gini_l_r)## [1] 982.915For the variable seasons:

gini_split_seasons <-

gini_split(

data_node = df_train, variable_to_predict = "y_binary",

variable_split = "seasons", threshold = c("Winter"), minbucket = 7)

nrow(df_train) * (gini_initial - gini_split_seasons$gini_l_r)## [1] 793.1418For the variable month:

gini_split_month <-

gini_split(

data_node = df_train, variable_to_predict = "y_binary",

variable_split = "month", threshold = c("Jan","Feb","Dec"), minbucket = 7)

nrow(df_train) * (gini_initial - gini_split_month$gini_l_r)## [1] 793.1418and so on.

4.3.4 Variable Importance

For a given node, the variable that is eventually used to perform the split is called a primary variable. We have seen that the choice of the primary split is done using a metric that computes the goodness of fit (for example, the Gini Index or the Impurity index in the case of a classification tree).

To compute the importance of a variable, we can also consider the surrogate variables. These variables are used to try to handle missing values. Once a primary split has been obtained, another variable can be used to try to obtain a similar assignment in the left and right nodes. If there are missing values when we use the primary split, then a surrogate split can be used to make the prediction. The surrogate variable may thus be relatively important if they are able to reproduce more or less the same results as the split obtained with the primary variable.

Let us have a quick look at how to measure the capacity of a surrogate variable to reproduce the same results as that obtained with a primary variable. To do so, we depart from what is explained in Section 3.4 of the vignette of {rpart}.

Once again, let us focus on the root node.

current_node <- df_trainThe primary variable for that split, is temperature, and the best cutoff is 3.35. Let us use the variable seasons as a surrogate. Among all the possible combinations of splitting rules for seasons, the one that gives the best results in terms of error rate is "Winter".

tmp <-

current_node %>%

mutate(

# Primary split

leave_primary = case_when(

temperature < 3.35 ~ "left prim.",

temperature >= 3.35 ~ "right prim."),

# Surrogate split

leave_surrogate = case_when(

seasons == "Winter" ~ "left surr.",

seasons != "Winter" ~ "right surr."

))Let us look at the results:

confusion <- table(tmp$leave_primary, tmp$leave_surrogate, exclude = NULL)

confusion##

## left surr. right surr.

## left prim. 1826 202

## right prim. 334 4410Using the surrogate, 1826 + 4410 = 6236 out of the 6772 observations are sent to the correct direction. This corresponds to an average proportion of correctly sent observations of 0.9208506.

correct_direction <- (confusion["left prim.", "left surr."] + confusion["right prim.", "right surr."])

correct_direction## [1] 6236# Proportion

correct_direction/sum(confusion)## [1] 0.9208506The majority rule gets 4744 correct:

maj_rule_correct <- sum(confusion["right prim.", ])

maj_rule_correct## [1] 4744Hence, the adjusted agreement is:

adjust <- (correct_direction - maj_rule_correct) / (sum(confusion) - maj_rule_correct)

adjust## [1] 0.7357002The relative importance of a variable is computed by accounting for when it appears as a primary variable, and also when it appears as a surrogate variable.

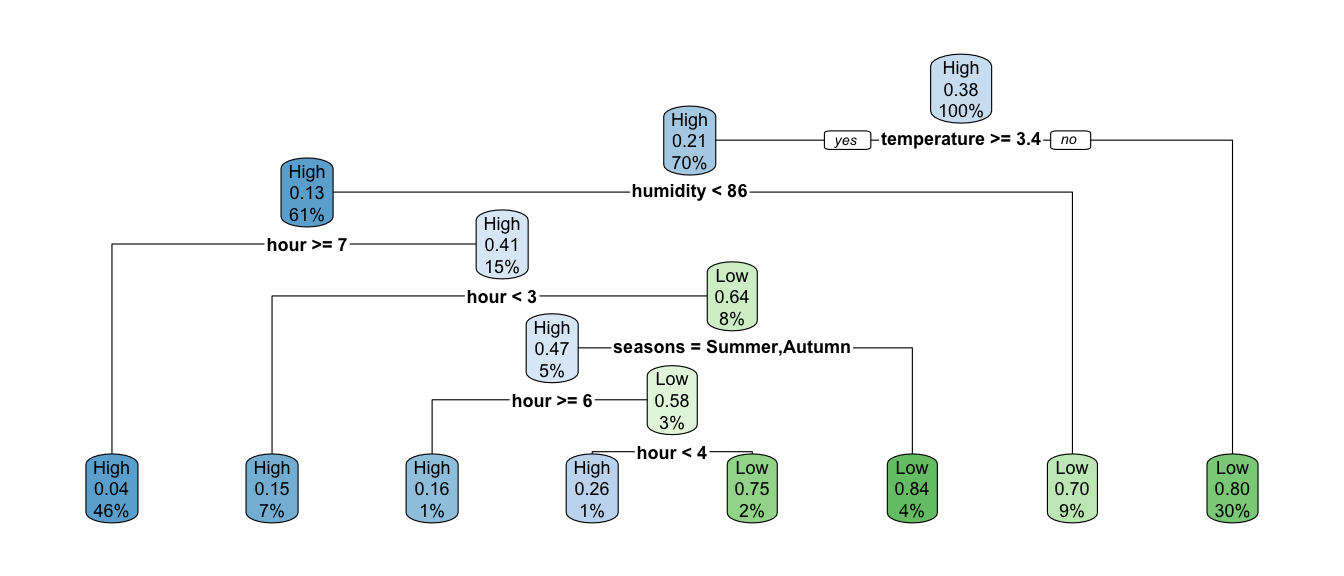

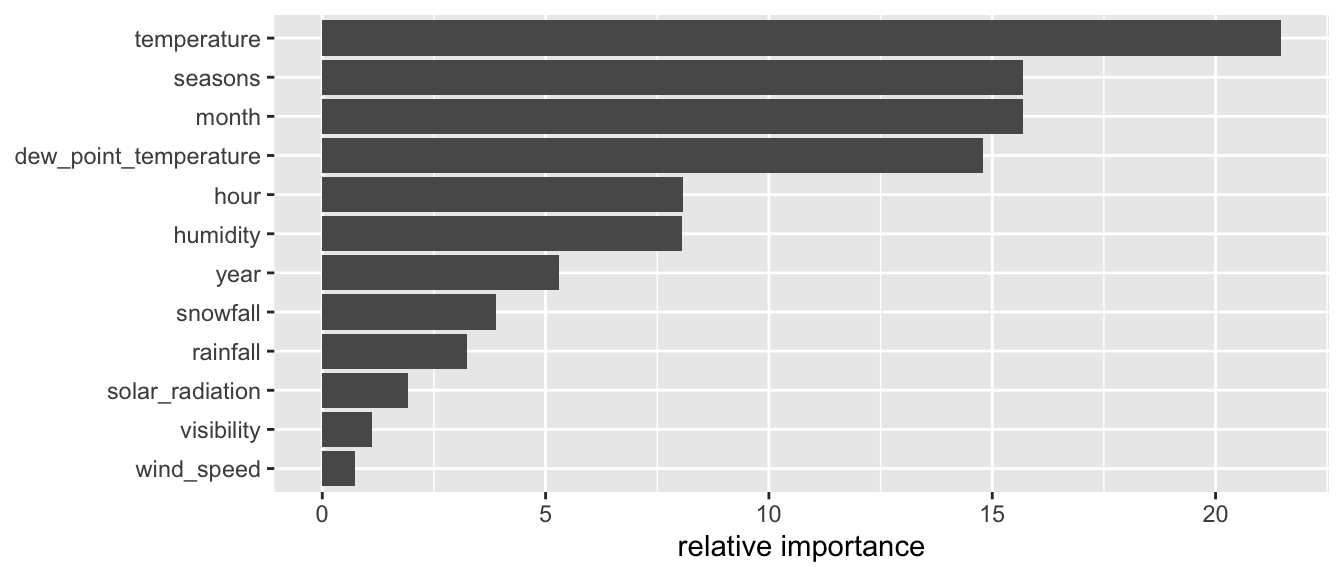

The composite metrics can be accessed through the variable.importance element of the result returned by the rpart() function.

classif_tree_gini$variable.importance## temperature month seasons

## 989.14552 723.13074 723.13074

## dew_point_temperature hour humidity

## 681.60244 372.01312 371.05659

## year snowfall rainfall

## 244.27473 179.32867 148.96535

## solar_radiation visibility wind_speed

## 88.03473 51.16607 34.21947When we call the summary() function on the result of the rpart() function, we get rounded values of the relative importance of the variables:

round(100 * classif_tree_gini$variable.importance /

sum(classif_tree_gini$variable.importance))## temperature month seasons

## 21 16 16

## dew_point_temperature hour humidity

## 15 8 8

## year snowfall rainfall

## 5 4 3

## solar_radiation visibility wind_speed

## 2 1 1The only thing that remains is to understand how those values are obtained. To that end, we need to better understand the content of the split element that is returned by the rpart() function. In this table, primary variables, competitor variables (variables that would have produce a relatively close result in term of increase in the goodness of fit), and surrogate variables are given in a jumble.

splits_df <-

as_tibble(classif_tree_gini$splits, rownames = "variable")

splits_df## # A tibble: 43 × 6

## variable count ncat improve index adj

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl>

## 1 temperature 6772 1 983. 3.35 0

## 2 seasons 6772 4 793. 1 0

## 3 month 6772 12 793. 2 0

## 4 dew_point_temperature 6772 1 540. 2.65 0

## 5 hour 6772 1 435. 6.5 0

## 6 seasons 0 4 0.921 3 0.736

## 7 month 0 12 0.921 4 0.736

## 8 dew_point_temperature 0 1 0.907 -3.85 0.688

## 9 year 0 1 0.775 2018. 0.249

## 10 snowfall 0 -1 0.755 0.05 0.182

## # … with 33 more rowsWe can use the results of table contained in the element frame of the result returned by the rpart() function. More specifically, the columns ncompete and nsurrogate tell us how many competitor variables and how many surrogate variables are reported in the table in the splits element, just after the primary variable.

head(classif_tree_gini$frame)## var n wt dev yval complexity ncompete nsurrogate yval2.V1

## 1 temperature 6772 6772 2606 1 0.462010744 4 5 1.000000e+00

## 2 humidity 4744 4744 990 1 0.095548734 4 3 1.000000e+00

## 4 hour 4117 4117 552 1 0.029163469 4 4 1.000000e+00

## 8 <leaf> 3097 3097 129 1 0.000000000 0 0 1.000000e+00

## 9 hour 1020 1020 423 1 0.029163469 4 5 1.000000e+00

## yval2.V2 yval2.V3 yval2.V4 yval2.V5 yval2.nodeprob

## 1 4.166000e+03 2.606000e+03 6.151802e-01 3.848198e-01 1.000000e+00

## 2 3.754000e+03 9.900000e+02 7.913153e-01 2.086847e-01 7.005316e-01

## 4 3.565000e+03 5.520000e+02 8.659218e-01 1.340782e-01 6.079445e-01

## 8 2.968000e+03 1.290000e+02 9.583468e-01 4.165321e-02 4.573243e-01

## 9 5.970000e+02 4.230000e+02 5.852941e-01 4.147059e-01 1.506202e-01

## [ getOption("max.print") est atteint -- ligne 1 omise ]We can thus create a vector that can be added to the table that contains the information on the splits.

# Position of the primary variables in `frame`

ind_primary <- which(classif_tree_gini$frame$var != "<leaf>")

type_var_df <-

classif_tree_gini$frame %>%

slice(ind_primary) %>%

select(ncompete, nsurrogate)

type_var <- NULL

for(i in 1:nrow(type_var_df)){

type_var <- c(type_var, "primary",

rep("competitor", type_var_df[i, "ncompete"]),

rep("surrogate", type_var_df[i, "nsurrogate"])

)

}

type_var## [1] "primary" "competitor" "competitor" "competitor" "competitor"

## [6] "surrogate" "surrogate" "surrogate" "surrogate" "surrogate"

## [11] "primary" "competitor" "competitor" "competitor" "competitor"

## [16] "surrogate" "surrogate" "surrogate" "primary" "competitor"

## [21] "competitor" "competitor" "competitor" "surrogate" "surrogate"

## [26] "surrogate" "surrogate" "primary" "competitor" "competitor"

## [31] "competitor" "competitor" "surrogate" "surrogate" "surrogate"

## [36] "surrogate" "surrogate" "primary" "competitor" "competitor"

## [41] "competitor" "competitor" "surrogate"This vector can then be added in splits_df:

splits_df <- splits_df %>% mutate(type_var = type_var)

splits_df## # A tibble: 43 × 7

## variable count ncat improve index adj type_var

## <chr> <dbl> <dbl> <dbl> <dbl> <dbl> <chr>

## 1 temperature 6772 1 983. 3.35 0 primary

## 2 seasons 6772 4 793. 1 0 competitor

## 3 month 6772 12 793. 2 0 competitor

## 4 dew_point_temperature 6772 1 540. 2.65 0 competitor

## 5 hour 6772 1 435. 6.5 0 competitor

## 6 seasons 0 4 0.921 3 0.736 surrogate

## 7 month 0 12 0.921 4 0.736 surrogate

## 8 dew_point_temperature 0 1 0.907 -3.85 0.688 surrogate

## 9 year 0 1 0.775 2018. 0.249 surrogate

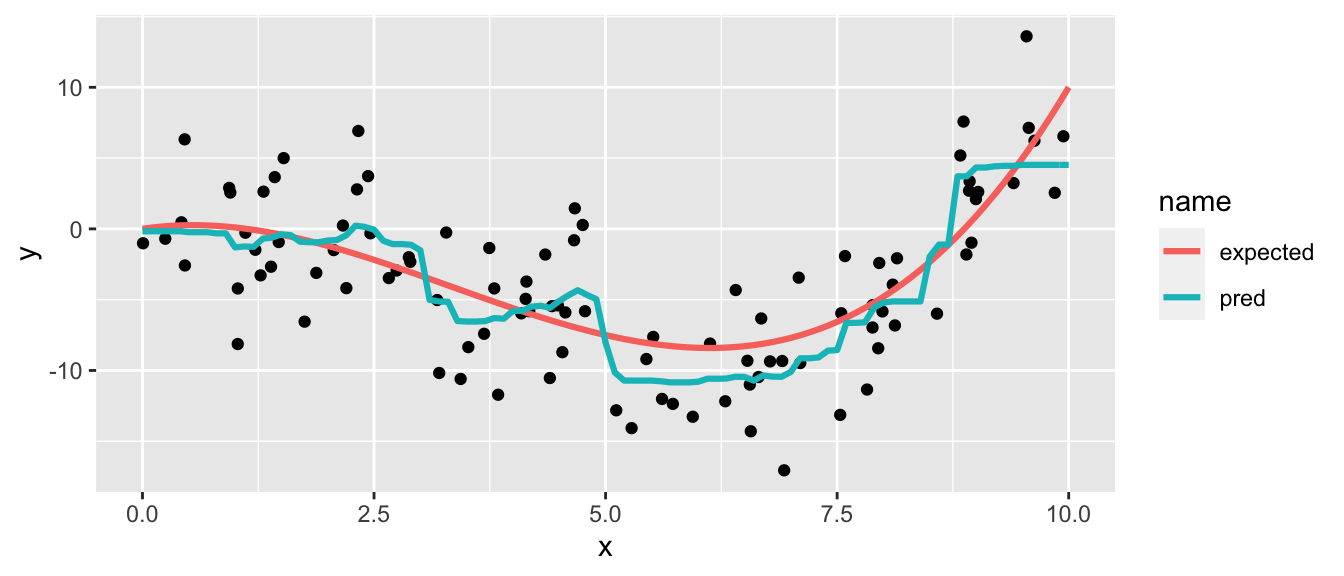

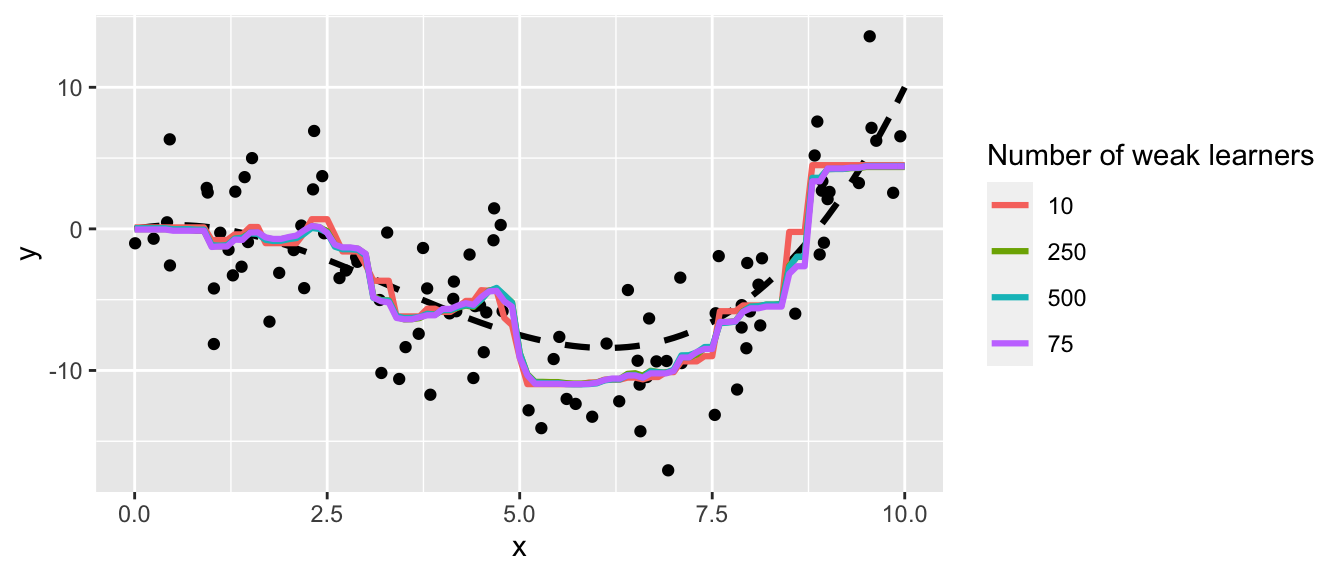

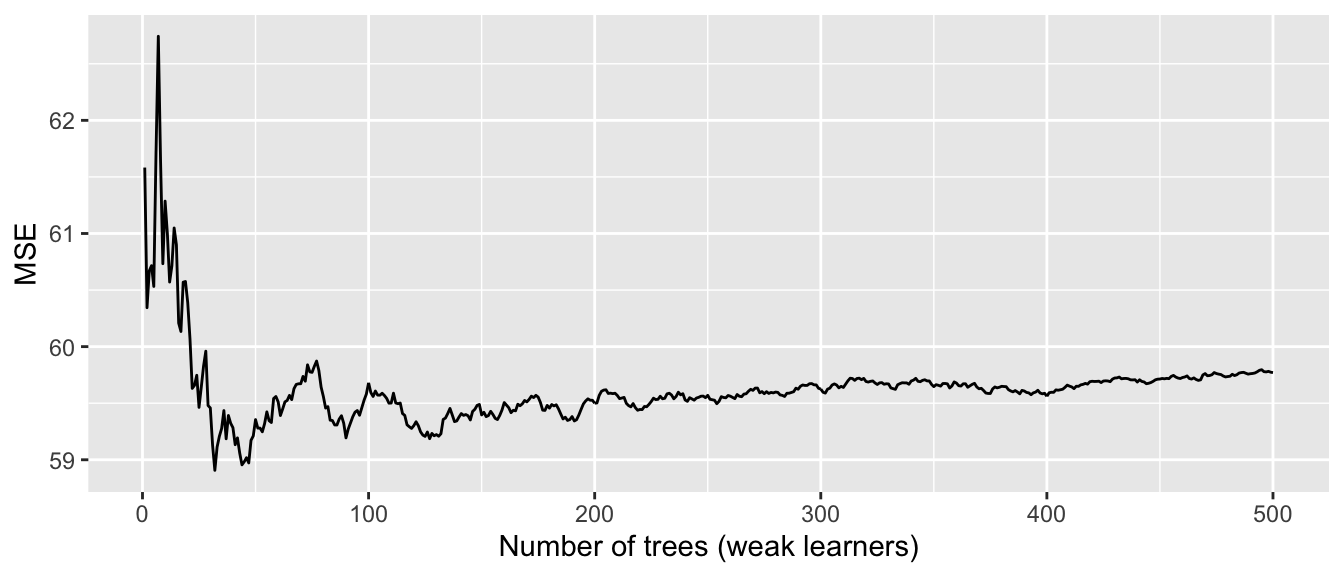

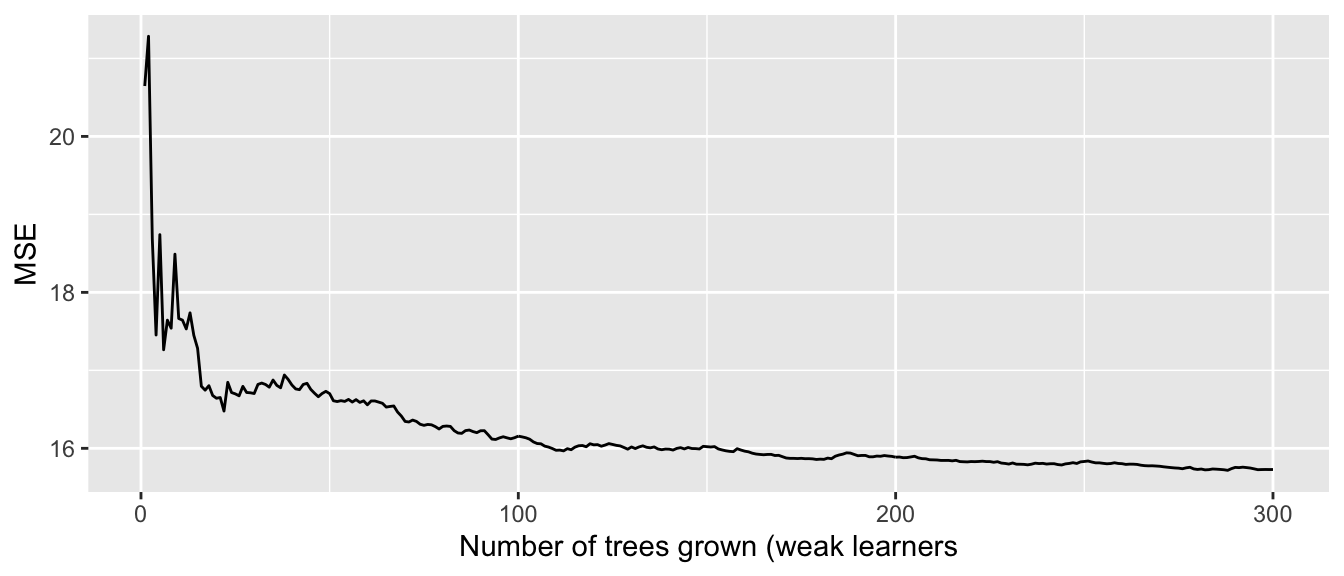

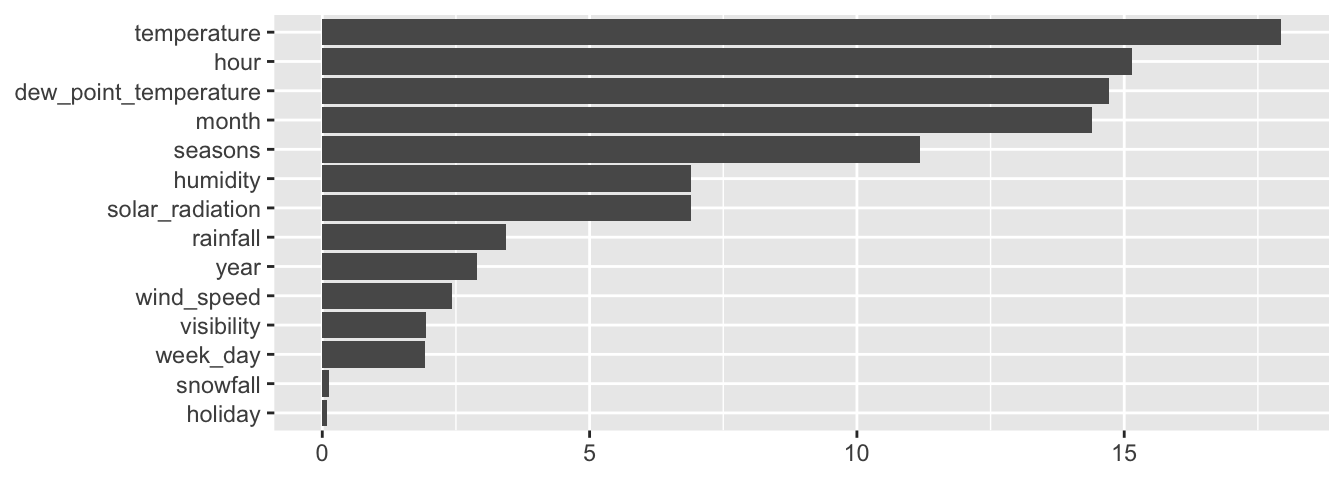

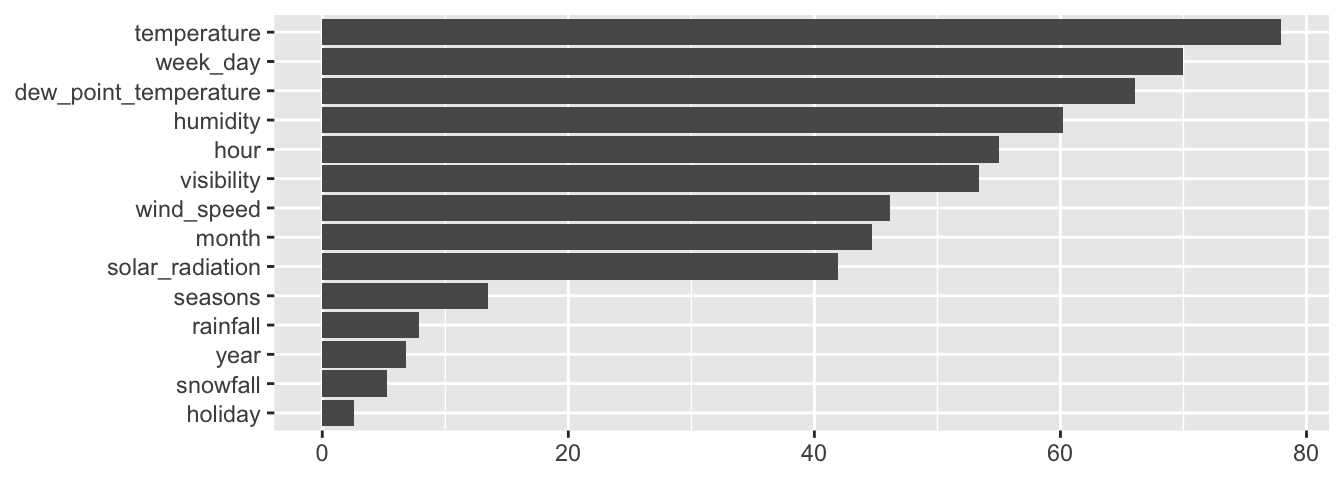

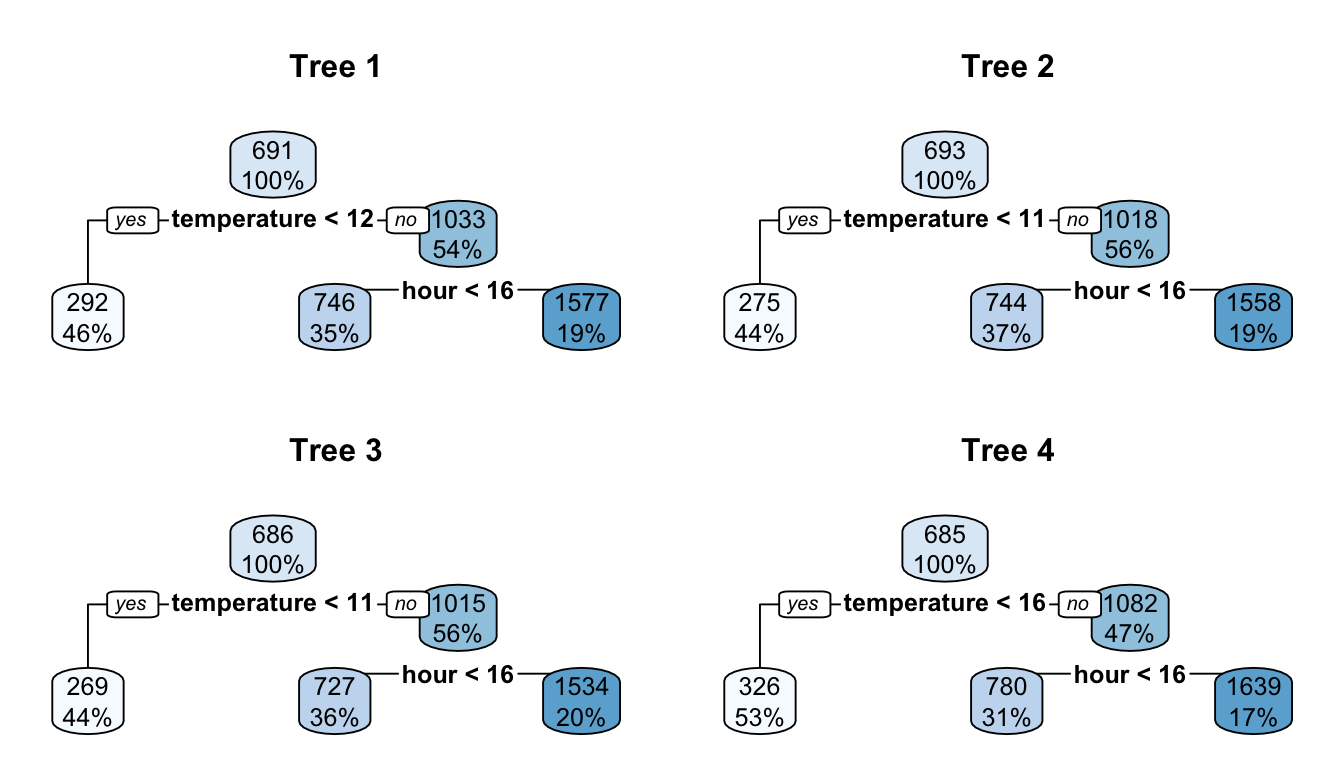

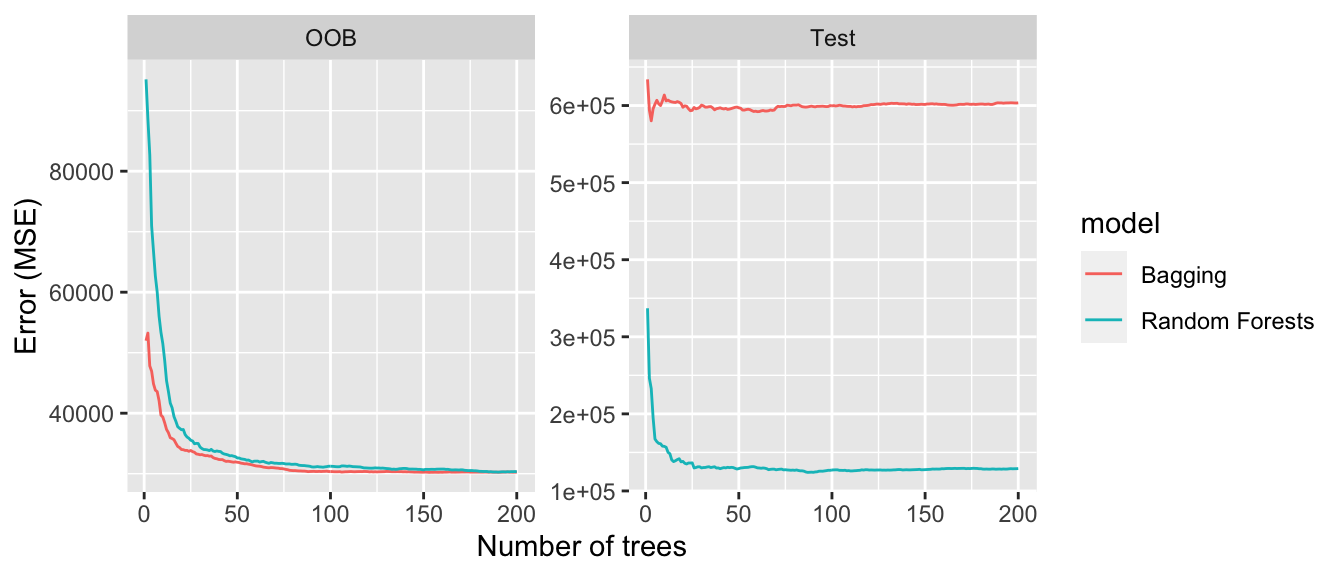

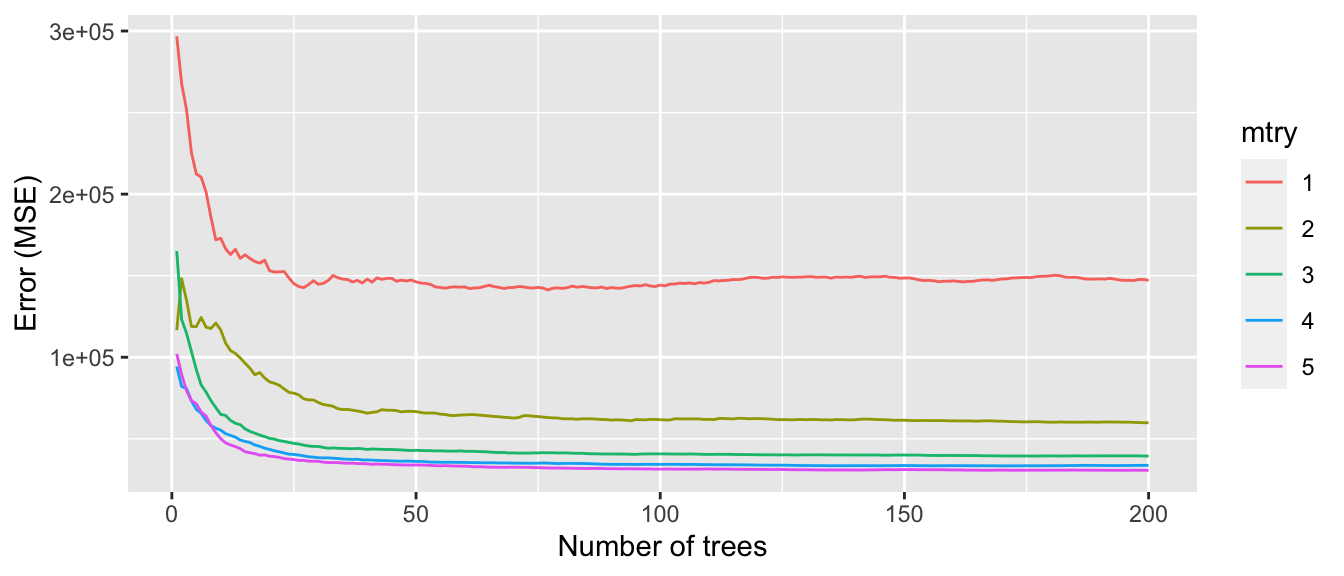

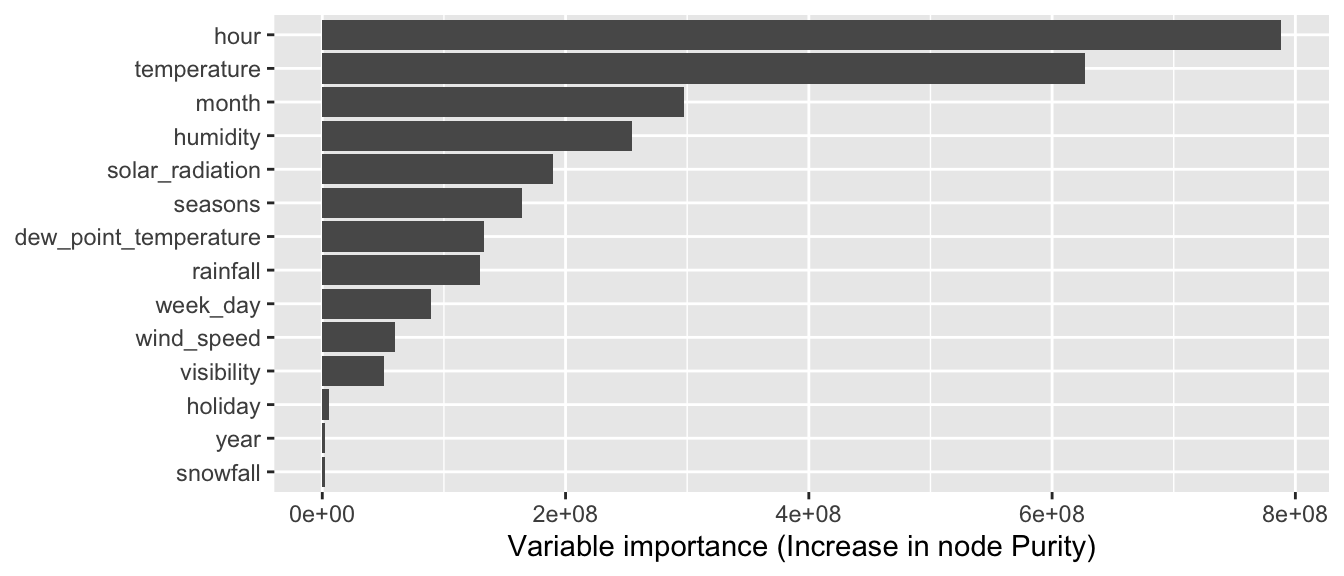

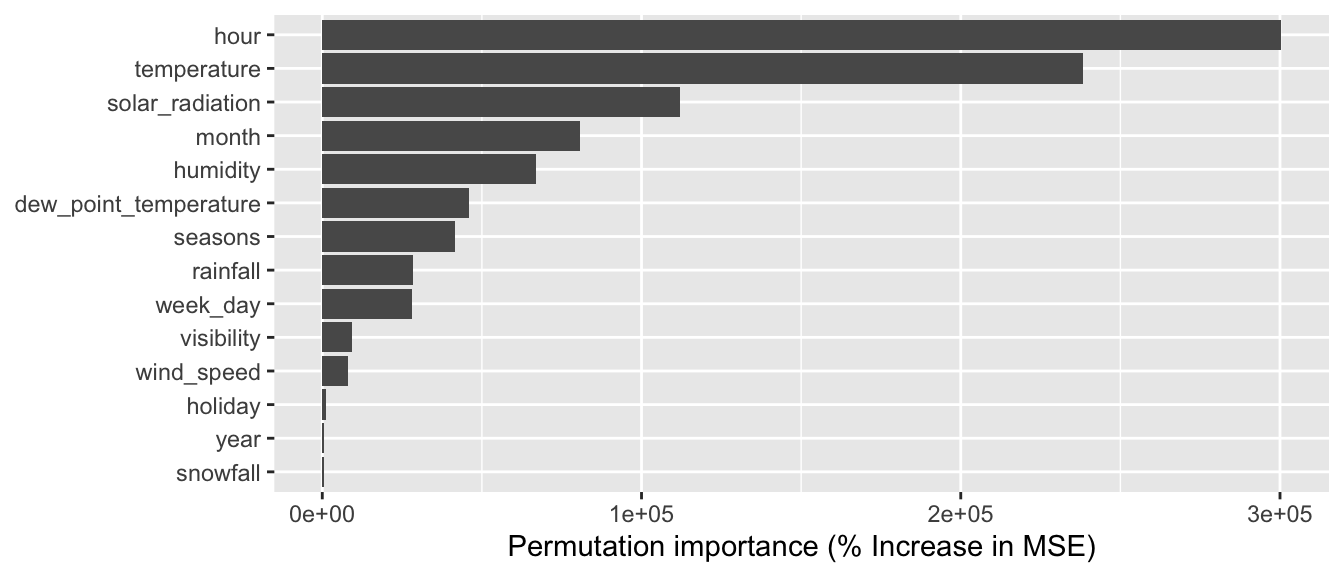

## 10 snowfall 0 -1 0.755 0.05 0.182 surrogate