From Uncertainty to Precision: Enhancing Binary Classifier Performance through Calibration

With Agathe Fernandes Machado, Arthur Charpentier, François Hu and Emmanuel Flachaire, we have uploaded our new working paper to arXiv: https://arxiv.org/abs/2402.07790

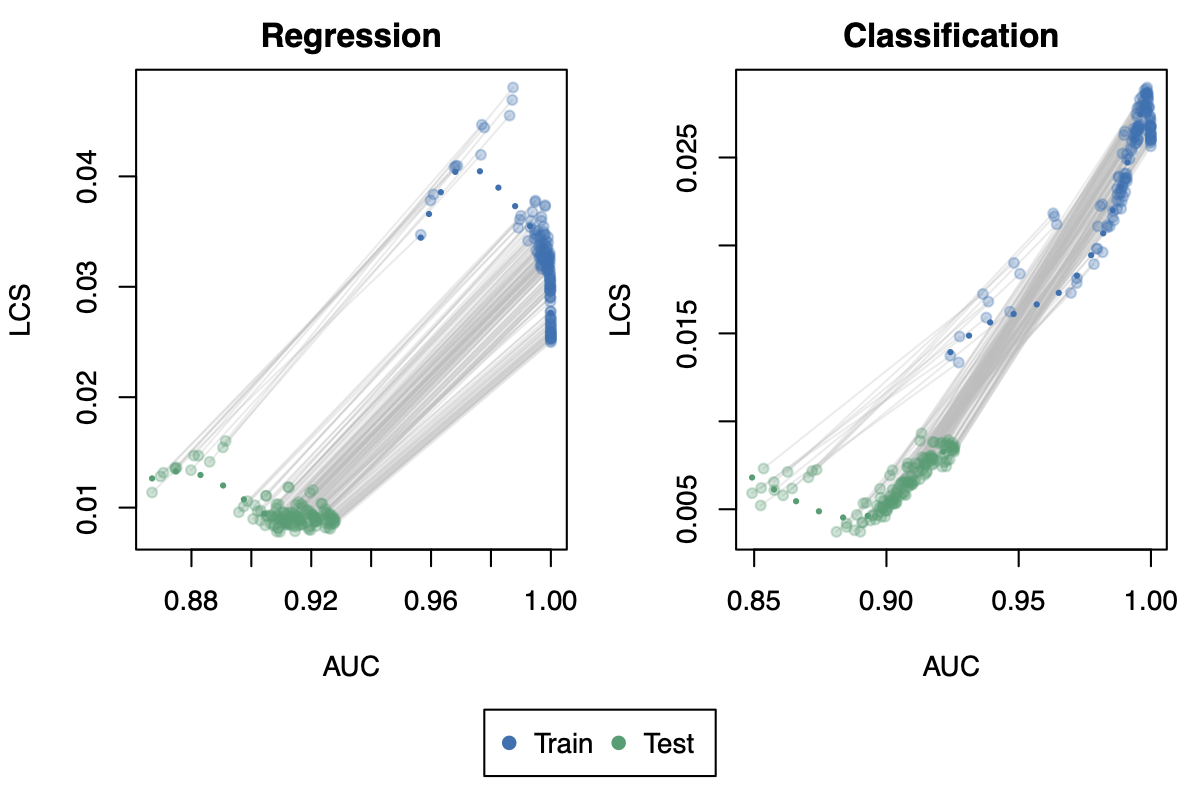

The paper focuses on the output of binary classifiers when one is interested in the underlying probability of occurrence of the event. In the article, we review different ways to measure and visualize calibration for a binary classifier. Then, we propose a new metric based on local regression, which we name the local calibration score (LCS). Using simulated data first, we observe the impact of poor calibration on standard performance metrics (such as accuracy or AUC). Lastly, we examine a real-world scenario using a Random Forest classifier on credit default data.

The assessment of binary classifier performance traditionally centers on discriminative ability, using metrics such as accuracy. However, these metrics often disregard the model’s inherent uncertainty, especially in sensitive decision-making domains such as finance or healthcare. Given that model-predicted scores are commonly interpreted as event probabilities, calibration is crucial for accurate interpretation. In our study, we analyze the sensitivity of various calibration measures to score distortions and introduce a refined metric, the Local Calibration Score. Comparing recalibration methods, we advocate for local regressions, emphasizing their dual role as effective recalibration tools and facilitators of smoother visualizations. We apply these findings in a real-world scenario, using a Random Forest classifier and regressor to predict credit default while simultaneously measuring calibration during performance optimization.
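To make the idea of a score distortion concrete, here is a minimal Python sketch. It is not the paper's R code and it does not implement the LCS; it only uses two standard calibration measures (the Brier score and a binned calibration error) alongside a rank-based AUC. The simulation setup is an assumption made for illustration: true probabilities are drawn uniformly on [0, 1], and the distortion is a hypothetical power transform `p ** gamma` with `gamma = 3`. All function and variable names are ours.

```python
import random


def brier_score(y, p):
    """Mean squared difference between predicted scores and binary outcomes."""
    return sum((pi - yi) ** 2 for yi, pi in zip(y, p)) / len(y)


def binned_calibration_error(y, p, n_bins=10):
    """Weighted mean absolute gap between mean score and event rate per bin."""
    # Each bin stores [count, sum of scores, sum of outcomes].
    bins = [[0, 0.0, 0.0] for _ in range(n_bins)]
    for yi, pi in zip(y, p):
        b = min(int(pi * n_bins), n_bins - 1)  # equal-width bins on [0, 1]
        bins[b][0] += 1
        bins[b][1] += pi
        bins[b][2] += yi
    n = len(y)
    return sum(abs(s / c - e / c) * c / n for c, s, e in bins if c > 0)


def auc(y, p):
    """Rank-based AUC (Mann-Whitney statistic), with average ranks for ties."""
    order = sorted(range(len(p)), key=lambda i: p[i])
    ranks = [0.0] * len(p)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and p[order[j + 1]] == p[order[i]]:
            j += 1
        avg_rank = (i + j) / 2 + 1  # ranks are 1-based
        for k in range(i, j + 1):
            ranks[order[k]] = avg_rank
        i = j + 1
    n_pos = sum(y)
    n_neg = len(y) - n_pos
    rank_sum_pos = sum(r for r, yi in zip(ranks, y) if yi == 1)
    return (rank_sum_pos - n_pos * (n_pos + 1) / 2) / (n_pos * n_neg)


# Simulated data (assumption): true probabilities uniform on [0, 1].
rng = random.Random(0)
n = 20000
p_true = [rng.random() for _ in range(n)]
y = [1 if rng.random() < p else 0 for p in p_true]

# Hypothetical distortion: a strictly increasing transform of the true scores.
gamma = 3.0
p_distorted = [p ** gamma for p in p_true]

for name, scores in [("true", p_true), ("distorted", p_distorted)]:
    print(
        name,
        "AUC=%.4f" % auc(y, scores),
        "Brier=%.4f" % brier_score(y, scores),
        "binned error=%.4f" % binned_calibration_error(y, scores),
    )
```

Because the transform is strictly increasing, it preserves the ranking of the scores, so the AUC stays the same while both calibration measures get worse. This is the kind of gap between discrimination and calibration that motivates measuring the two separately.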

A replication ebook is available on Agathe’s GitHub page: codes (ebook)

The corresponding R code is available on Agathe’s GitHub: GitHub