Geospatial Disparities: A Case Study on Real Estate Prices in Paris

With Agathe Fernandes Machado, Arthur Charpentier, François Hu and Philipp Ratz, we have uploaded our new working paper to arXiv: https://arxiv.org/abs/2401.16197

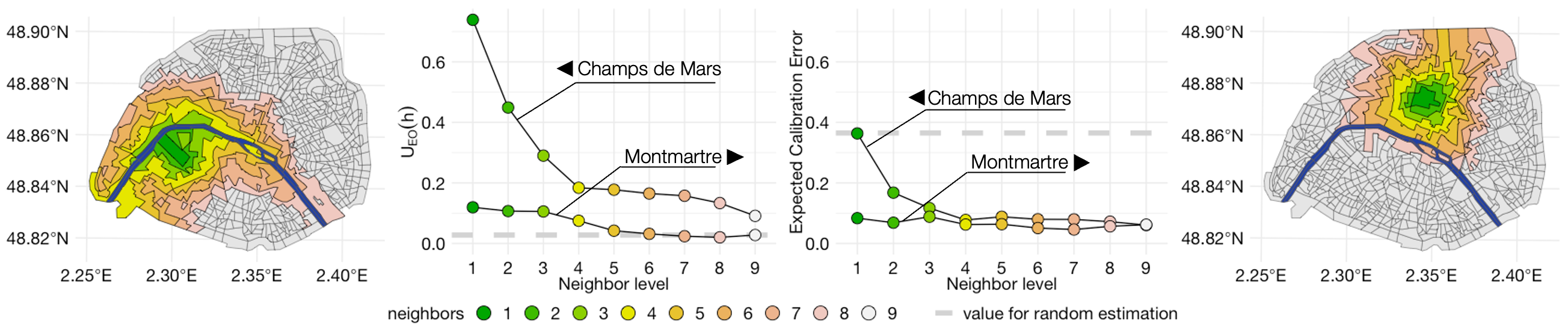

The paper focuses on spatial data aggregation, and specifically on the choice of aggregation level. Whether data are aggregated to represent administrative areas at various levels of granularity, to maintain anonymity, or for other reasons, that choice can introduce unfairness. We first assess how spatial aggregation of ordinal data can result in biases. We then introduce a post-processing method that mitigates these biases with respect to demographic parity. If the aggregation zones were defined for redlining or zoning purposes, our method lets us treat the zone as a sensitive attribute and correct the resulting unfairness.
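
To make the idea of treating zones as a sensitive attribute more concrete, here is a minimal Python sketch. It is not taken from the paper or its replication code. It assumes continuous predicted scores (for example, predicted prices per square meter) and uses quantile matching towards a one-dimensional Wasserstein barycenter, which is one common post-processing approach for demographic parity. The function names `dp_gap` and `barycenter_correction` and the synthetic data are hypothetical.

```python
import numpy as np


def dp_gap(scores, zones):
    """Largest absolute gap between a zone's mean score and the overall mean."""
    scores = np.asarray(scores, dtype=float)
    zones = np.asarray(zones)
    overall = scores.mean()
    return max(abs(scores[zones == z].mean() - overall) for z in np.unique(zones))


def barycenter_correction(scores, zones):
    """Map each zone's scores onto a shared distribution by quantile matching.

    The shared distribution is the 1D Wasserstein barycenter of the zone-wise
    score distributions: its quantile function is the size-weighted average
    of the zones' empirical quantile functions.
    """
    scores = np.asarray(scores, dtype=float)
    zones = np.asarray(zones)
    labels, counts = np.unique(zones, return_counts=True)
    weights = counts / counts.sum()
    corrected = np.empty_like(scores)
    for z in labels:
        mask = zones == z
        s = scores[mask]
        # Rank of each score within its own zone, rescaled to (0, 1).
        # Ties are broken arbitrarily, which is fine for continuous scores.
        ranks = (np.argsort(np.argsort(s)) + 0.5) / len(s)
        # Evaluate the barycenter quantile function at those ranks.
        corrected[mask] = sum(
            w * np.quantile(scores[zones == k], ranks)
            for k, w in zip(labels, weights)
        )
    return corrected


# Synthetic illustration (assumed data): two zones with different score levels.
rng = np.random.default_rng(0)
zones = np.array(["A"] * 400 + ["B"] * 600)
scores = np.concatenate([rng.normal(10, 1, 400), rng.normal(12, 1, 600)])

fair_scores = barycenter_correction(scores, zones)
print("gap before:", dp_gap(scores, zones))
print("gap after: ", dp_gap(fair_scores, zones))
```

The correction keeps the ranking of observations within each zone and only changes the distribution they are mapped onto, so after it the score distributions of the zones roughly coincide. The mean-based `dp_gap` is a deliberately crude summary; comparing full distributions would be a stricter check.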

Driven by an increasing prevalence of trackers, ever more IoT sensors, and the declining cost of computing power, geospatial information has come to play a pivotal role in contemporary predictive models. While enhancing prognostic performance, geospatial data also has the potential to perpetuate many historical socio-economic patterns, raising concerns about a resurgence of biases and exclusionary practices, with their disproportionate impacts on society. Addressing this, our paper emphasizes the crucial need to identify and rectify such biases and calibration errors in predictive models, particularly as algorithms become more intricate and less interpretable. The increasing granularity of geospatial information further introduces ethical concerns, as choosing different geographical scales may exacerbate disparities akin to redlining and exclusionary zoning. To address these issues, we propose a toolkit for identifying and mitigating biases arising from geospatial data. Extending classical fairness definitions, we incorporate an ordinal regression case with spatial attributes, deviating from the binary classification focus. This extension allows us to gauge disparities stemming from data aggregation levels and advocates for a less interfering correction approach. Illustrating our methodology using a Parisian real estate dataset, we showcase practical applications and scrutinize the implications of choosing geographical aggregation levels for fairness and calibration measures.

A replication ebook is available on Agathe’s GitHub page: codes (ebook)

The corresponding R (and Python) code is available on Agathe’s GitHub: GitHub